This article shows how to use Python to scrape a movie's bullet comments (danmaku) and analyze what viewers are saying. Follow along step by step.

First, I used Python to scrape all of the movie's bullet comments. The scraper is fairly simple, so I won't go into detail. Here is the code:

```python
import json

import pandas as pd
import requests

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36"
}

url = 'https://mfm.video.qq.com/danmu?otype=json&target_id=6480348612%26vid%3Dh0035b23dyt'

# The parameters that turned out to control which comments are returned are
# target_id and timestamp; each request returns one 30-second batch of comments.
comids = []
comments = []
opernames = []
upcounts = []
timepoints = []

n = 15  # timestamp of the first batch; advanced by 30 after each request

while True:
    data = {"timestamp": n}
    # verify=False skips TLS certificate verification, as in the original script
    response = requests.get(url, headers=headers, params=data, verify=False)
    # Parse the JSON text into a dict (safer than eval, which executes the text as code)
    res = json.loads(response.text)
    con = res["comments"]

    if res['count'] == 0:  # no comments in this batch: the crawl is finished
        break

    n += 30
    for j in con:
        comids.append(j['commentid'])
        opernames.append(j["opername"])
        comments.append(j["content"])
        upcounts.append(j["upcount"])
        timepoints.append(j["timepoint"])

data = pd.DataFrame({'id': comids, 'name': opernames, 'comment': comments,
                     'up': upcounts, 'pon': timepoints})
data.to_excel('發財日記彈幕.xlsx')
```

First, load the comment data with pandas:

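```python
import pandas as pd

# index_col=0 restores the index that to_excel wrote as the first column
data = pd.read_excel('發財日記彈幕.xlsx', index_col=0)
data
```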

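There are nearly 40,000 comments. The 5 columns are the comment ID (`id`), nickname (`name`), content (`comment`), number of likes (`up`), and the comment's position in the movie in seconds (`pon`).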

Next, split the movie into 6-minute intervals and see how the number of comments changes from one interval to the next:

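```python
# Interval boundaries in minutes: 0, 6, 12, ..., 132
time_list = [str(i // 60) for i in range(0, 8280, 360)]

# Interval labels such as '0-6', '6-12', ..., '126-132'
pero_list = ['{0}-{1}'.format(time_list[i], time_list[i + 1])
             for i in range(len(time_list) - 1)]

# Number of comments whose position (in seconds) falls in each [start, end) interval
counts = []
for p in pero_list:
    start, end = (int(x) * 60 for x in p.split('-'))
    counts.append(int(((data.pon >= start) & (data.pon < end)).sum()))
```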
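The same per-interval counts can also be computed in one pass with `pd.cut`. This is a sketch of an alternative to the loop above, not the author's code: `comments_per_interval` is a hypothetical helper name, and it assumes the `pon` values are positions in seconds. Comments at or after the 132-minute mark are ignored, just as the loop ignores them.

```python
import pandas as pd


def comments_per_interval(pon_seconds, interval_min=6, end_min=132):
    """Count comments per [start, end) window of interval_min minutes (hypothetical helper)."""
    # Bin edges in seconds: 0, 360, 720, ..., end_min * 60
    edges = list(range(0, end_min * 60 + 1, interval_min * 60))
    labels = [f"{a // 60}-{b // 60}" for a, b in zip(edges[:-1], edges[1:])]
    # right=False gives half-open [start, end) bins; values outside become NaN
    bins = pd.cut(pd.Series(pon_seconds), bins=edges, labels=labels, right=False)
    # On a categorical, value_counts keeps empty bins; sort=False keeps time order
    return bins.value_counts(sort=False)


# Example with a few made-up positions (in seconds)
print(comments_per_interval([10, 20, 400, 8000]).head(3))
```

With the real data, `comments_per_interval(data['pon'])` yields the labels (`.index.tolist()`) and counts (`.tolist()`) that `pero_list` and `counts` hold.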

```python
import pyecharts.options as opts
from pyecharts.charts import Line
from pyecharts.globals import ThemeType

line = (
    Line(init_opts=opts.InitOpts(theme=ThemeType.DARK))
    .add_xaxis(xaxis_data=pero_list)
    .add_yaxis("", counts, is_smooth=True)
    .set_global_opts(
        # x axis: movie time; y axis: number of comments
        xaxis_opts=opts.AxisOpts(axislabel_opts=opts.LabelOpts(rotate=-15), name="電影時長"),
        title_opts=opts.TitleOpts(title="不同時間彈幕數量變化情況"),
        yaxis_opts=opts.AxisOpts(name="彈幕數量"),
    )
)
line.render_notebook()  # renders the chart inline in a Jupyter notebook
```

The comment counts show two peaks, around the 60-minute and 120-minute marks, which suggests the movie has at least two climaxes.

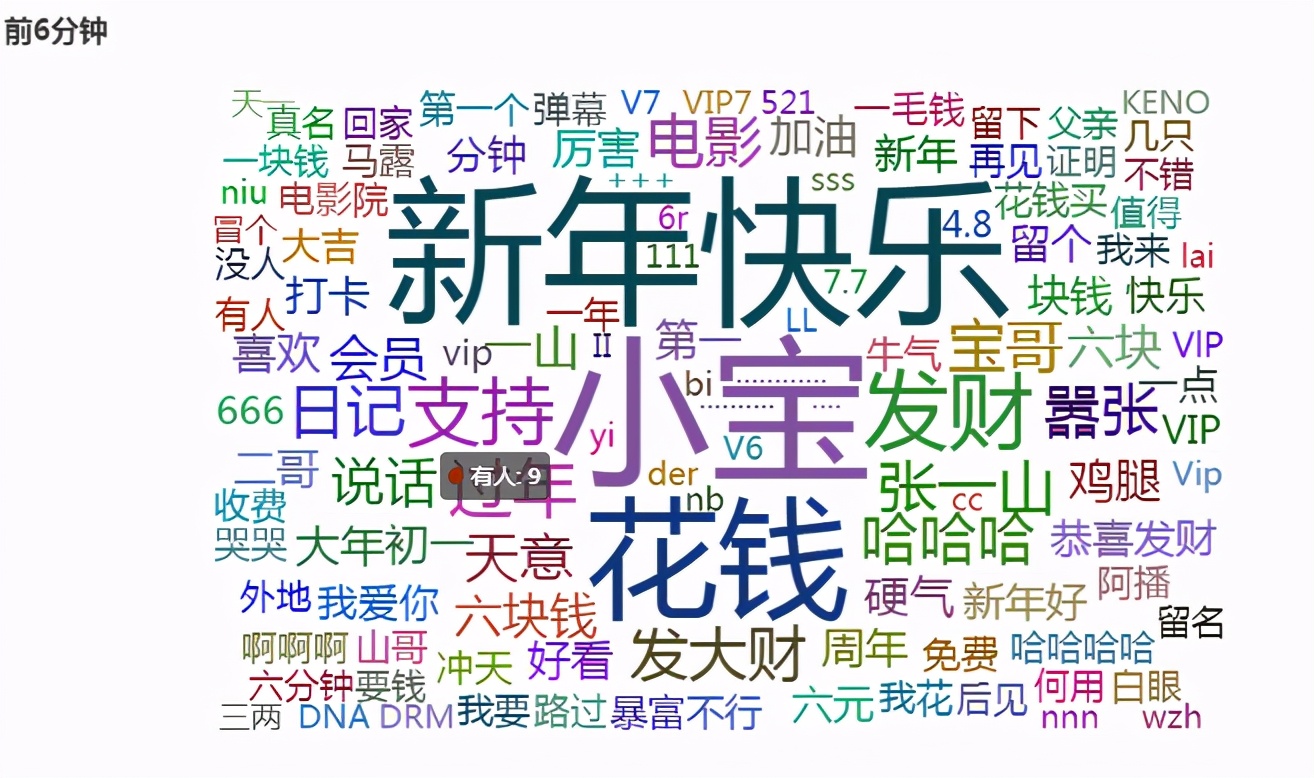
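The two peaks above were read off the chart by eye. As a sketch of how they could be located programmatically, the hypothetical helper `top_peaks` below keeps the intervals whose count is strictly higher than both neighbours (a simple local-maximum rule, assumed here rather than taken from the original) and returns the `k` highest of them.

```python
def top_peaks(counts, labels, k=2):
    """Return the labels of the k highest local maxima in counts (hypothetical helper)."""
    peaks = []
    n = len(counts)
    for i, c in enumerate(counts):
        # Treat positions beyond either end as -infinity so edge intervals can be peaks
        left = counts[i - 1] if i > 0 else float('-inf')
        right = counts[i + 1] if i < n - 1 else float('-inf')
        if c > left and c > right:
            peaks.append((c, labels[i]))
    # Highest peaks first
    peaks.sort(key=lambda p: p[0], reverse=True)
    return [label for _, label in peaks[:k]]


print(top_peaks([5, 9, 4, 3, 7, 2], ['a', 'b', 'c', 'd', 'e', 'f']))
```

With the lists from the earlier step, the call would be `top_peaks(counts, pero_list)`. Because the comparison is strict, a flat plateau of equal counts is not reported as a peak; a noisy series may also need smoothing before this rule gives sensible results.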

To satisfy our curiosity, let's look at what viewers were saying during the first 6 minutes (the free preview) and at the two peaks.

```python
# Word cloud code
import jieba  # Chinese word segmentation
import pandas as pd
from pyecharts import options as opts
from pyecharts.charts import WordCloud
from pyecharts.globals import SymbolType


def ciyun(content):
    """Segment text with jieba, remove stopwords, and return word frequencies."""
    # Segment with jieba, keeping tokens longer than one character
    segment = [seg for seg in jieba.cut(content) if len(seg) > 1 and seg != '\r\n']
    words_df = pd.DataFrame({'segment': segment})

    # Remove stopwords (text denoising); stopword.txt holds one stopword per line
    stopwords = pd.read_csv("stopword.txt", index_col=False,
                            quoting=3, sep='\t', names=['stopword'], encoding="utf8")
    words_df = words_df[~words_df.segment.isin(stopwords.stopword)]

    # Count each word and sort from most to least frequent
    words_stat = words_df.groupby('segment').size().reset_index(name='count')
    return words_stat.sort_values(by="count", ascending=False)


# Comments in the first 6 minutes (0-360 s)
data_6_text = ''.join(data[(data.pon >= 0) & (data.pon < 360)]['comment'].values.tolist())
words_stat = ciyun(data_6_text)

words = list(zip(words_stat['segment'].tolist(), words_stat['count'].tolist()))
c = (
    WordCloud()
    .add("", words, word_size_range=[20, 100], shape=SymbolType.DIAMOND)
    .set_global_opts(title_opts=opts.TitleOpts(title='前6分鐘'))
)
c.render_notebook()
```

The top-ranked word is "小寶" (Xiaobao), and words such as "好看" (great) and "支持" (support) also appear, so Xiaobao seems to be quite popular.
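The counting half of `ciyun` does not depend on jieba. As a minimal sketch, assuming the text has already been split into tokens and the stopwords loaded into a list, the same filtering (tokens longer than one character, not `'\r\n'`, not a stopword) and ranking can be written with `collections.Counter`; `count_words` is a hypothetical name, not part of the original code.

```python
from collections import Counter


def count_words(tokens, stopwords):
    """Rank tokens by frequency with the same filters as ciyun (hypothetical helper)."""
    stop = set(stopwords)
    kept = [t for t in tokens if len(t) > 1 and t != '\r\n' and t not in stop]
    # most_common() sorts by count, highest first; ties keep first-seen order
    return Counter(kept).most_common()


print(count_words(['小寶', '好看', '小寶', '的', '我們', '哈哈哈'], ['我們']))
```

The resulting list of `(word, count)` pairs has the same shape as `words` above, so it could be passed directly to `WordCloud().add`.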

```python
# Comments in minutes 54-60, the first peak
data_60_text = ''.join(data[(data.pon >= 54 * 60) & (data.pon < 60 * 60)]['comment'].values.tolist())
words_stat = ciyun(data_60_text)

words = list(zip(words_stat['segment'].tolist(), words_stat['count'].tolist()))
c = (
    WordCloud()
    .add("", words, word_size_range=[20, 100], shape=SymbolType.DIAMOND)
    .set_global_opts(title_opts=opts.TitleOpts(title='第一個高潮'))
)
c.render_notebook()
```

The top words are "小寶" (Xiaobao), "二哥" (Second Brother), "哈哈哈" (hahaha), and "好看" (great), which suggests that something funny happens between Xiaobao and 二哥 at this point.

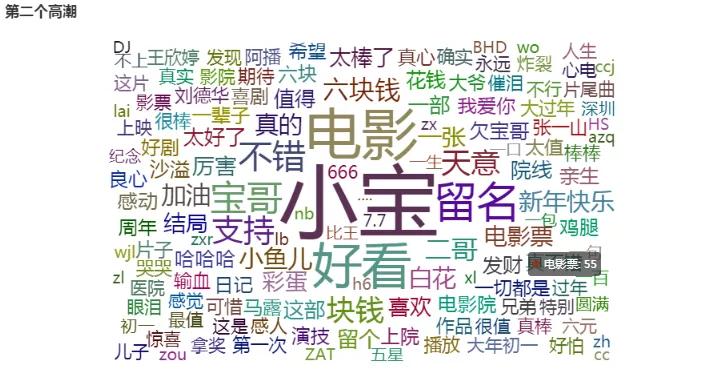

```python
# Comments in minutes 120-128, the second peak
data_120_text = ''.join(data[(data.pon >= 120 * 60) & (data.pon < 128 * 60)]['comment'].values.tolist())
words_stat = ciyun(data_120_text)

words = list(zip(words_stat['segment'].tolist(), words_stat['count'].tolist()))
c = (
    WordCloud()
    .add("", words, word_size_range=[20, 100], shape=SymbolType.DIAMOND)
    .set_global_opts(title_opts=opts.TitleOpts(title='第二個高潮'))
)
c.render_notebook()
```

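Among the high-frequency words are "好看" (great), "淚點" (tear-jerking moment), and "哭哭" (crying), suggesting that the ending is very moving.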
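The three word clouds differ only in the time window they read from. A small helper could pull the text for any window; `window_text` is a hypothetical name, and the sketch assumes the same `pon` (seconds) and `comment` columns as `data`.

```python
import pandas as pd


def window_text(df, start_min, end_min):
    """Join all comments whose position is in [start_min, end_min) minutes (hypothetical helper)."""
    mask = (df['pon'] >= start_min * 60) & (df['pon'] < end_min * 60)
    # astype(str) guards against non-string cells read back from Excel
    return ''.join(df.loc[mask, 'comment'].astype(str))


# Made-up rows for illustration
sample = pd.DataFrame({'pon': [10, 3300, 3500, 7300], 'comment': ['a', 'b', 'c', 'd']})
print(window_text(sample, 54, 60))
```

With it, the three analyses become `ciyun(window_text(data, 0, 6))`, `ciyun(window_text(data, 54, 60))`, and `ciyun(window_text(data, 120, 128))`.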

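Next, let's dig into the users who posted the most comments and see what they are saying. Some comments have no nickname, so those need to be removed first: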

```python
# Drop comments without a nickname
data1 = data[data['name'].notna()]

# Count comments per nickname
data2 = pd.DataFrame({'num': data1['name'].value_counts()})
data3 = data2.rename_axis('name').reset_index()

# Keep only the users who posted more than 100 comments
top_fans = data3[data3.num > 100]
print(top_fans)

# Concatenate all comments from those users
data_text = ''
for i in top_fans['name'].values.tolist():
    data_text += ''.join(data[data.name == i]['comment'].values.tolist())
words_stat = ciyun(data_text)

words = list(zip(words_stat['segment'].tolist(), words_stat['count'].tolist()))
c = (
    WordCloud()
    .add("", words, word_size_range=[20, 100], shape=SymbolType.DIAMOND)
    .set_global_opts(title_opts=opts.TitleOpts(title='粉絲彈幕'))
)
c.render_notebook()
```
