This article walks through building a highly available MySQL 5.6 architecture with Keepalived and mutual master-slave (master-master) replication.

I. Test environment

Operating system: Red Hat Enterprise Linux Server release 6.5 (Santiago)

MySQL version: MySQL-5.6.38-1.el6.x86_64.rpm-bundle.tar

keepalived version: keepalived-1.2.7-3.el6.x86_64.rpm

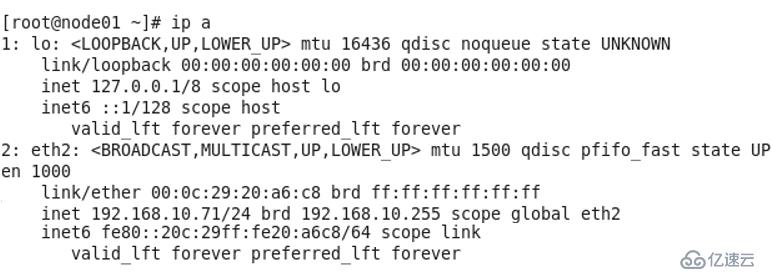

node01:192.168.10.71

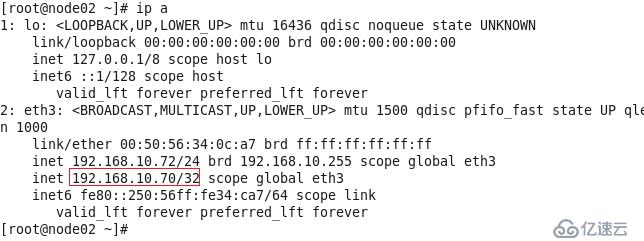

node02:192.168.10.72

VIP:192.168.10.70

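II. Configure master-slave replication with node01 as master and node02 as slave

1. Install MySQL 5.6.38 on both node01 and node02. For the installation procedure, see the previous post, 《MySQL 5.6.38在RedHat 6.5上通過RPM包安裝》 (installing MySQL 5.6.38 on RedHat 6.5 from RPM packages).

2. Edit /etc/my.cnf on both node01 and node02 as follows: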

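[mysqld]

log-bin=mysql-bin

# server-id must be unique per server: 1 on node01, a different value (for example 2) on node02

server-id = 1

[mysqld_safe]

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

# Note: replicate-do-db expects a database name, and mysqld does not read the [mysqld_safe] group, so this line has no effect here; all databases replicate by default

replicate-do-db = all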
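Because the two nodes differ only in a few values, the [mysqld] fragment can be generated per node instead of being typed twice. The following is a minimal sketch, not the configuration used in the test above: render_mysqld_fragment is a hypothetical helper, and the auto_increment_increment / auto_increment_offset lines are an added assumption, commonly used so that AUTO_INCREMENT keys do not collide if both nodes ever accept writes.

```shell
#!/bin/sh
# Hypothetical helper (not part of MySQL): print a [mysqld] fragment for one
# node of the pair. $1 is the node number: 1 for node01, 2 for node02.
render_mysqld_fragment() {
    node_id="$1"
    # The auto_increment_* lines are an assumption added for illustration;
    # the original article sets only log-bin and server-id.
    cat <<EOF
[mysqld]
log-bin = mysql-bin
server-id = ${node_id}
auto_increment_increment = 2
auto_increment_offset = ${node_id}
EOF
}
```

For example, `render_mysqld_fragment 2` prints a fragment with server-id = 2 for node02; the output would still need to be merged into /etc/my.cnf by hand.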

3. Restart the mysql service

[root@node01 ~]# service mysql restart

[root@node02 ~]# service mysql restart

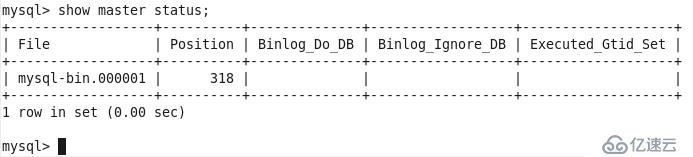

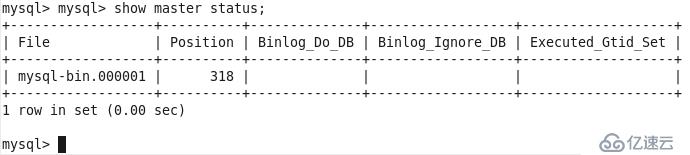

4. Log in to MySQL on node01, create the replication account repl with password 123456, then query the master status and note the File name and Position value

mysql> GRANT REPLICATION SLAVE ON *.* to 'repl'@'%' identified by '123456';

Query OK, 0 rows affected (0.00 sec)

mysql> show master status;

5. Log in to MySQL on node02 and run the following statements to start the slave. Note that master_host must be set to node01's IP

mysql> change master to master_host='192.168.10.71',master_user='repl',master_password='123456',master_log_file='mysql-bin.000001',master_log_pos=318;

Query OK, 0 rows affected, 2 warnings (0.36 sec)

Parameter notes:

master_host='192.168.10.71' ## IP address of the master

master_user='repl' ## user for replicating data (the user granted on the master)

master_password='123456' ## password of the replication user

master_log_file='mysql-bin.000001' ## binary log file from which the slave starts reading (shown by show master status on the master)

master_log_pos=318 ## position in that file from which to start reading

mysql> start slave;

Query OK, 0 rows affected (0.03 sec)
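Copying the File and Position values by hand is error-prone, so the statement can also be assembled from the master's own output. This is a minimal sketch, assuming the mysql client's tab-separated batch output with File and Position as the first two columns; build_change_master is a hypothetical helper name, not something MySQL provides.

```shell
#!/bin/sh
# Hypothetical helper: read one tab-separated line of SHOW MASTER STATUS
# output (File<TAB>Position<TAB>...) on stdin and print the matching
# CHANGE MASTER TO statement.
# Arguments: master host, replication user, replication password.
build_change_master() {
    master_host="$1"
    repl_user="$2"
    repl_pass="$3"
    # Split on tabs only; columns after Position are not needed.
    IFS="$(printf '\t')" read -r log_file log_pos _rest
    printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s;\n" \
        "$master_host" "$repl_user" "$repl_pass" "$log_file" "$log_pos"
}
```

On node01, something like `mysql -N -B -e 'SHOW MASTER STATUS' | build_change_master 192.168.10.71 repl 123456` would print the statement to run on node02. The same helper with 192.168.10.72 covers the reverse direction.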

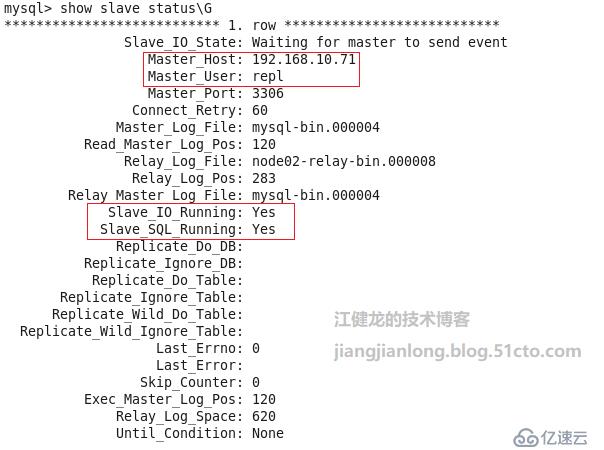

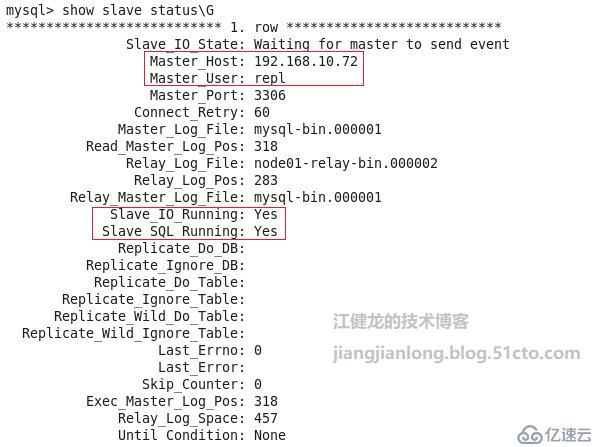

6. Check the slave status; it is normal

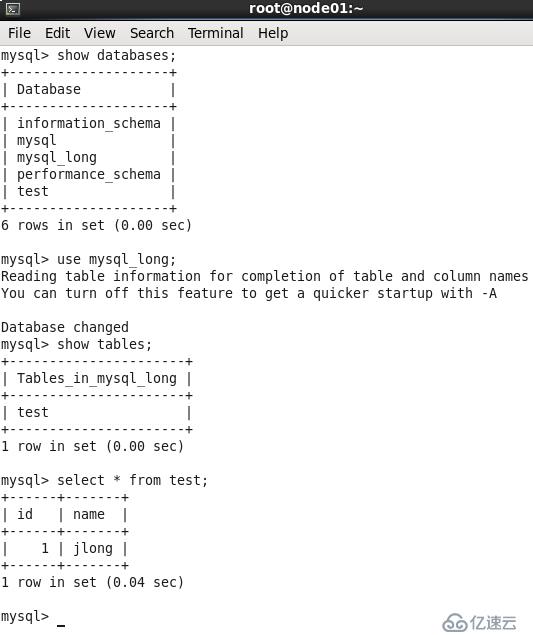
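A "normal" slave status usually means that both replication threads report Yes in the output of SHOW SLAVE STATUS\G. The check can be scripted; the sketch below is an illustration under that assumption, and slave_is_healthy is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read SHOW SLAVE STATUS\G output on stdin and succeed
# only if both the I/O thread and the SQL thread report "Yes".
slave_is_healthy() {
    status="$(cat)"
    printf '%s\n' "$status" | grep -Eq '^ *Slave_IO_Running: Yes *$' &&
        printf '%s\n' "$status" | grep -Eq '^ *Slave_SQL_Running: Yes *$'
}
```

A possible use on the slave is `mysql -e 'SHOW SLAVE STATUS\G' | slave_is_healthy && echo "replication OK"`. The anchored patterns do not match the separate Slave_SQL_Running_State field.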

7. On node01, create a database and a table and insert one row to test whether the change replicates to node02

mysql> create database mysql_long;

Query OK, 1 row affected (0.00 sec)

mysql> use mysql_long;

Database changed

mysql> create table test(id int(3),name char(5));

Query OK, 0 rows affected (0.13 sec)

mysql> insert into test values (001,'jlong');

Query OK, 1 row affected (0.01 sec)

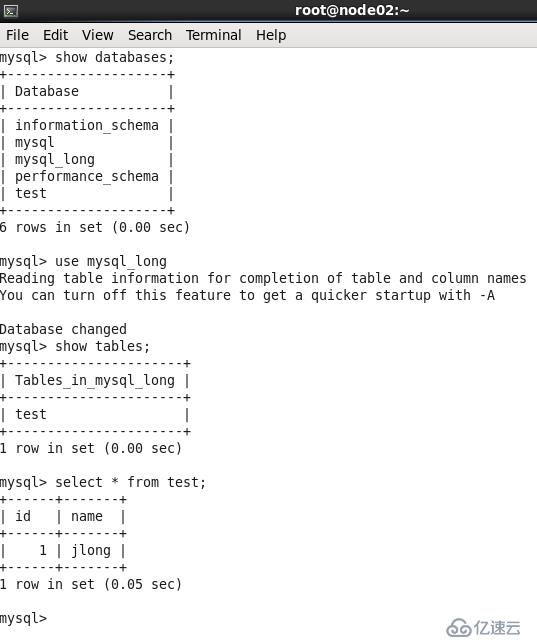

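8. Log in to MySQL on node02; the data has replicated correctly

III. Configure master-slave replication with node02 as master and node01 as slave

1. Log in to MySQL on node02, create the replication account repl with password 123456, then query the master status and note the File name and Position value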

mysql> GRANT REPLICATION SLAVE ON *.* to 'repl'@'%' identified by '123456';

Query OK, 0 rows affected (0.00 sec)

Query OK, 0 rows affected (0.10 sec)

2. Log in to MySQL on node01 and run the following statements to start the slave. Note that here master_host must be set to node02's IP

mysql> change master to master_host='192.168.10.72',master_user='repl',master_password='123456',master_log_file='mysql-bin.000001',master_log_pos=318;

Query OK, 0 rows affected, 2 warnings (0.36 sec)

mysql> start slave;

Query OK, 0 rows affected (0.03 sec)

3. Check the slave status; it is normal

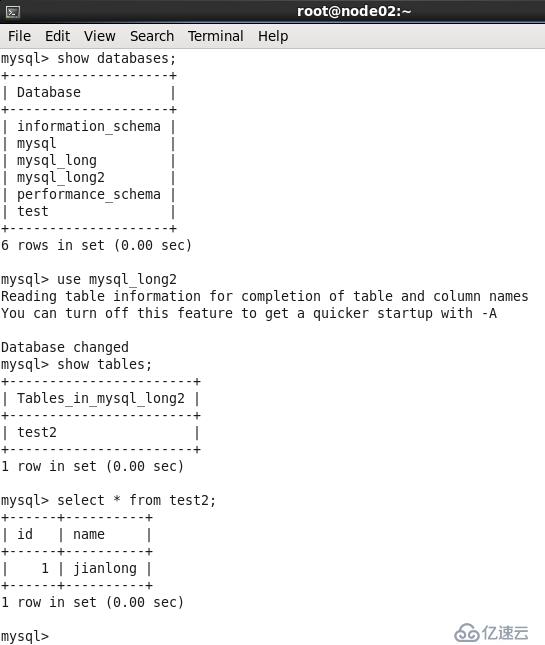

4. On node02, create a database and a table and insert one row to test whether the change replicates to node01

mysql> create database mysql_long2;

Query OK, 1 row affected (0.00 sec)

mysql> use mysql_long2;

Database changed

mysql> create table test2(id int(3),name char(10));

Query OK, 0 rows affected (0.71 sec)

mysql> insert into test2 values (001,'jianlong');

Query OK, 1 row affected (0.00 sec)

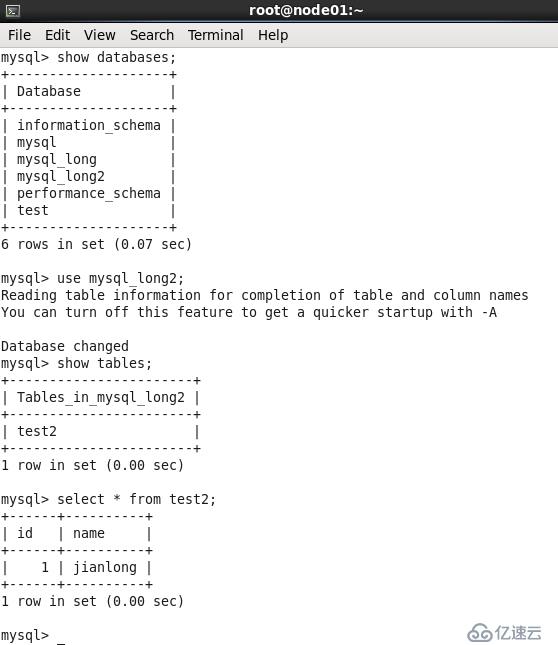

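5. The data replicates to node01 correctly.

Mutual master-slave replication is now configured: each machine is both the master and the slave of the other, so a change made on either machine is promptly replicated to the other. Next we configure keepalived to provide high availability.

IV. keepalived configuration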

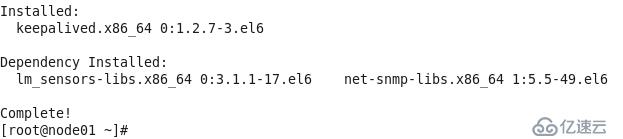

1. Install keepalived on node01 with yum

[root@node01 ~]# yum install keepalived -y

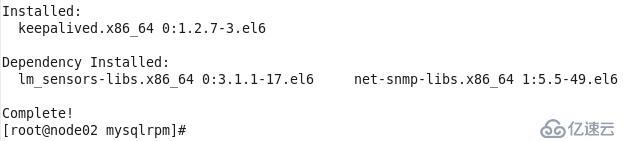

2. Install keepalived on node02 with yum as well

[root@node02 ~]# yum install keepalived -y

3. Edit the keepalived configuration file on node01

[root@node01 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

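state BACKUP ## both node01 and node02 are configured as BACKUP; the role is decided by priority

interface eth3

virtual_router_id 71

priority 100 ## node01's priority is set higher than node02's

advert_int 1

nopreempt ## do not preempt (only effective when state is BACKUP)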

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.70

}

}

virtual_server 192.168.10.70 3306 {

delay_loop 6

lb_algo wrr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 192.168.10.71 3306 {

weight 100

notify_down /etc/keepalived/stopkeepalived.sh # run this script when port 3306 is unavailable

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 3306

}

}

}

4. Edit the keepalived configuration file on node02

[root@node02 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

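state BACKUP ## both node01 and node02 are configured as BACKUP; the role is decided by priority

interface eth4

virtual_router_id 71

priority 90 ## node02's priority is set lower than node01's

advert_int 1

nopreempt ## do not preempt (only effective when state is BACKUP)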

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.10.70

}

}

virtual_server 192.168.10.70 3306 {

delay_loop 6

lb_algo wrr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 50

protocol TCP

real_server 192.168.10.72 3306 {

weight 100

notify_down /etc/keepalived/stopkeepalived.sh # run this script when port 3306 is unavailable

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 3306

}

}

}

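[root@node02 ~]#

5. On both node01 and node02, create a keepalived "suicide" script, /etc/keepalived/stopkeepalived.sh. As soon as the health check finds MySQL's port 3306 unreachable, keepalived runs this script to trigger the VIP switchover. The script is very simple: it only needs to run service keepalived stop, because stopping the keepalived service triggers the high-availability switchover.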
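The script itself is not shown above, so the following is only a sketch of what it might look like, at the path named by notify_down in the configuration. SERVICE_CMD is an illustrative override added here so the function can be dry-run; it is not a keepalived setting.

```shell
#!/bin/sh
# Sketch of /etc/keepalived/stopkeepalived.sh (contents assumed, not taken
# from the original article).
# SERVICE_CMD is a hypothetical override for dry runs; it defaults to the
# real `service` command used throughout this article.
: "${SERVICE_CMD:=service}"

stop_keepalived() {
    # Stopping keepalived makes this node give up the VIP, so the peer
    # can take it over.
    "$SERVICE_CMD" keepalived stop
}
```

The deployed file would end with a bare `stop_keepalived` call and would need to be executable, for example via `chmod +x /etc/keepalived/stopkeepalived.sh`.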

6. Start the keepalived service

[root@node01 ~]# service keepalived start

Starting keepalived: [ OK ]

[root@node02 ~]# service keepalived start

Starting keepalived: [ OK ]

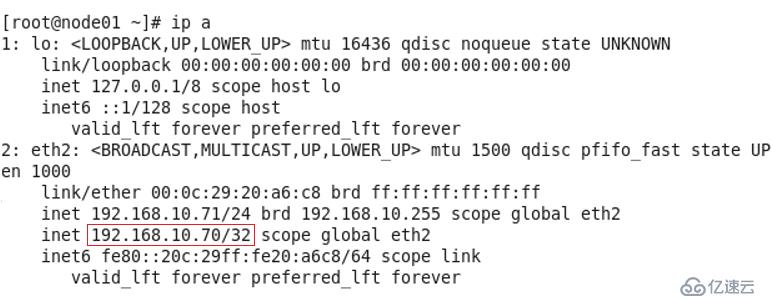

7. Watch the messages log on node01. Because node01 has the higher priority, it enters the MASTER role, and the VIP has been added

[root@node01 ~]# tail -f /var/log/messages
Oct 30 22:47:46 node01 Keepalived[3170]: Starting Keepalived v1.2.7 (09/26,2012)
Oct 30 22:47:46 node01 Keepalived[3171]: Starting Healthcheck child process, pid=3173
Oct 30 22:47:46 node01 Keepalived[3171]: Starting VRRP child process, pid=3174
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Interface queue is empty
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Netlink reflector reports IP 192.168.10.71 added
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Netlink reflector reports IP fe80::20c:29ff:fe20:a6c8 added
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Registering Kernel netlink reflector
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Registering Kernel netlink command channel
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Registering gratuitous ARP shared channel
Oct 30 22:47:46 node01 kernel: IPVS: Registered protocols (TCP, UDP, SCTP, AH, ESP)
Oct 30 22:47:46 node01 kernel: IPVS: Connection hash table configured (size=4096,memory=64Kbytes)
Oct 30 22:47:46 node01 kernel: IPVS: ipvs loaded.
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Opening file '/etc/keepalived/keepalived.conf'.
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Configuration is using : 63319 Bytes
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: Using LinkWatch kernel netlink reflector...
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Interface queue is empty
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Netlink reflector reports IP 192.168.10.71 added
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Netlink reflector reports IP fe80::20c:29ff:fe20:a6c8 added
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Registering Kernel netlink reflector
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Registering Kernel netlink command channel
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Opening file '/etc/keepalived/keepalived.conf'
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Configuration is using : 11970 Bytes
Oct 30 22:47:46 node01 Keepalived_vrrp[3174]: VRRP sockpool: [ifindex(2), proto(112), fd(11,12)]
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Using LinkWatch kernel netlink reflector...
Oct 30 22:47:46 node01 Keepalived_healthcheckers[3173]: Activating healthchecker for service [192.168.10.71]:3306
Oct 30 22:47:46 node01 kernel: IPVS: [wrr] scheduler registered.
Oct 30 22:47:47 node01 Keepalived_vrrp[3174]: VRRP_Instance(VI_1) Transition to MASTER STATE
Oct 30 22:47:48 node01 Keepalived_vrrp[3174]: VRRP_Instance(VI_1) Entering MASTER STATE
Oct 30 22:47:48 node01 Keepalived_vrrp[3174]: VRRP_Instance(VI_1) setting protocol VIPs.
Oct 30 22:47:48 node01 Keepalived_vrrp[3174]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth3 for 192.168.10.70
Oct 30 22:47:48 node01 Keepalived_healthcheckers[3173]: Netlink reflector reports IP 192.168.10.70 added

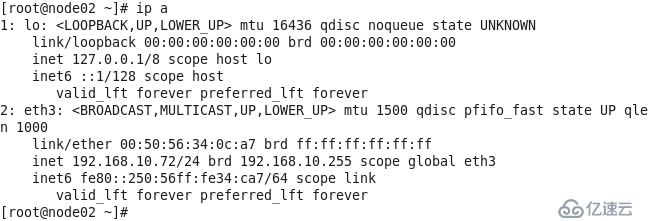
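Besides the log, the VIP can be confirmed by listing the interface addresses. Addresses added by keepalived typically show up in `ip addr` output rather than in `ifconfig`. The sketch below assumes that output format; has_vip is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `ip addr` output on stdin and succeed if the
# given IPv4 address ($1) is configured on the node.
has_vip() {
    # Fixed-string match on "inet <address>/" so that 192.168.10.70 does
    # not match 192.168.10.71 or a longer address.
    grep -Fq "inet $1/"
}
```

On node01 this could be used as `ip -4 addr show dev eth3 | has_vip 192.168.10.70 && echo "VIP is on this node"`; on node02 the interface in this setup is eth4.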

8. Watch the messages log on node02. node02 enters the BACKUP role, so the VIP is not added there.

[root@node02 ~]# tail -f /var/log/messages
Oct 30 22:48:54 node02 Keepalived[16633]: Starting Keepalived v1.2.7 (09/26,2012)
Oct 30 22:48:54 node02 Keepalived[16634]: Starting Healthcheck child process, pid=16636
Oct 30 22:48:54 node02 Keepalived[16634]: Starting VRRP child process, pid=16637
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Interface queue is empty
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Interface queue is empty
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Netlink reflector reports IP 192.168.10.72 added
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Netlink reflector reports IP fe80::250:56ff:fe34:ca7 added
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Registering Kernel netlink reflector
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Registering Kernel netlink command channel
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Registering gratuitous ARP shared channel
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Opening file '/etc/keepalived/keepalived.conf'.
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Netlink reflector reports IP 192.168.10.72 added
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Netlink reflector reports IP fe80::250:56ff:fe34:ca7 added
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Registering Kernel netlink reflector
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Registering Kernel netlink command channel
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Opening file '/etc/keepalived/keepalived.conf'.
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Configuration is using : 11988 Bytes
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Configuration is using : 63337 Bytes
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: Using LinkWatch kernel netlink reflector...
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: VRRP_Instance(VI_1) Entering BACKUP STATE
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Using LinkWatch kernel netlink reflector...
Oct 30 22:48:54 node02 Keepalived_vrrp[16637]: VRRP sockpool: [ifindex(2), proto(112), fd(10,11)]
Oct 30 22:48:54 node02 Keepalived_healthcheckers[16636]: Activating healthchecker for service [192.168.10.72]:3306

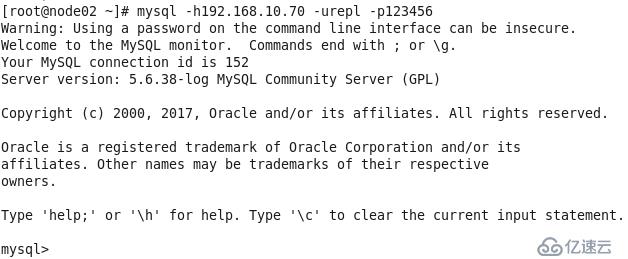

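9. Test whether MySQL can be reached through the VIP. Since the VIP is currently on node01, we log in from node02 through the VIP, and it works without problems

V. MySQL high-availability test

1. Stop the mysql service on node01. Port 3306 becomes unreachable; once keepalived detects this, it runs the suicide script and stops its own service, and the VIP is removed and released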

[root@node01 ~]# service mysql stop

Shutting down MySQL.. [ OK ]

2. The messages log on node01 shows the whole sequence clearly, and the VIP is gone

[root@node01 ~]# tail -f /var/log/messages
Oct 30 23:06:42 node01 Keepalived_healthcheckers[3173]: TCP connection to [192.168.10.71]:3306 failed !!!
Oct 30 23:06:42 node01 Keepalived_healthcheckers[3173]: Removing service [192.168.10.71]:3306 from VS [192.168.10.70]:3306
Oct 30 23:06:42 node01 Keepalived_healthcheckers[3173]: Executing [/etc/keepalived/stopkeepalived.sh] for service [192.168.10.71]:3306 in VS [192.168.10.70]:3306
Oct 30 23:06:42 node01 Keepalived_healthcheckers[3173]: Lost quorum 1-0=1 > 0 for VS [192.168.10.70]:3306
Oct 30 23:06:42 node01 Keepalived_healthcheckers[3173]: SMTP connection ERROR to [192.168.200.1]:25.
Oct 30 23:06:42 node01 kernel: IPVS: __ip_vs_del_service: enter
Oct 30 23:06:42 node01 Keepalived[3171]: Stopping Keepalived v1.2.7 (09/26,2012)
Oct 30 23:06:42 node01 Keepalived_vrrp[3174]: VRRP_Instance(VI_1) sending 0 priority
Oct 30 23:06:42 node01 Keepalived_vrrp[3174]: VRRP_Instance(VI_1) removing protocol VIPs.

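3. Watch the messages log on node02: node02 enters the MASTER role and takes over the VIP, which has now been added. Judging by the log timestamps, the switchover took only about 1 second, so this is effectively second-level failover.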

[root@node02 ~]# tail -f /var/log/messages
Oct 30 23:06:42 node02 Keepalived_vrrp[16637]: VRRP_Instance(VI_1) Transition to MASTER STATE
Oct 30 23:06:43 node02 Keepalived_vrrp[16637]: VRRP_Instance(VI_1) Entering MASTER STATE
Oct 30 23:06:43 node02 Keepalived_vrrp[16637]: VRRP_Instance(VI_1) setting protocol VIPs.
Oct 30 23:06:43 node02 Keepalived_vrrp[16637]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth4 for 192.168.10.70
Oct 30 23:06:43 node02 Keepalived_healthcheckers[16636]: Netlink reflector reports IP 192.168.10.70 added
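The switchover time can be read off the two logs: node01 reports the failed TCP check at 23:06:42 and node02 enters MASTER STATE at 23:06:43. The arithmetic is sketched below with two hypothetical helpers. Note that syslog timestamps have one-second resolution, and that this measures from the moment keepalived detects the failure; detection itself depends on the health-check interval (delay_loop 6 in this configuration).

```shell
#!/bin/sh
# Hypothetical helper: convert HH:MM:SS to seconds since midnight.
# The body runs in a subshell so the IFS change does not leak; ${n#0}
# strips one leading zero so values such as 08 are not read as octal.
to_seconds() (
    IFS=:
    set -- $1
    echo $(( ${1#0} * 3600 + ${2#0} * 60 + ${3#0} ))
)

# Hypothetical helper: seconds elapsed between two timestamps on the same
# day (it does not handle a switchover that spans midnight).
elapsed_seconds() {
    echo $(( $(to_seconds "$2") - $(to_seconds "$1") ))
}
```

With the timestamps above, `elapsed_seconds 23:06:42 23:06:43` prints 1.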

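Because the keepalived process on node01 was stopped by the suicide script, it has to be started manually. I initially considered running a monitoring script to bring keepalived back up automatically, but decided it was unnecessary: if MySQL is still unhealthy, keepalived would start, find local port 3306 unreachable, and kill itself again. And once MySQL has failed to the point that its port is unreachable, someone usually has to investigate and start MySQL by hand anyway, so starting keepalived manually once MySQL is healthy again is enough.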
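The manual recovery step can still be guarded so that keepalived is only started once MySQL is actually listening again. This is a minimal sketch, not something the original setup used: SERVICE_CMD and MYSQL_PORT are illustrative overrides, both function names are hypothetical, and the port probe assumes an nc that supports -z, as on RHEL 6.

```shell
#!/bin/sh
# Sketch only: start keepalived on a recovered node once MySQL answers
# locally. SERVICE_CMD and MYSQL_PORT are hypothetical overrides, not
# keepalived or MySQL settings.
: "${SERVICE_CMD:=service}"
: "${MYSQL_PORT:=3306}"

# Assumes an nc that supports -z (probe the port without sending data).
mysql_port_open() {
    nc -z 127.0.0.1 "$MYSQL_PORT" >/dev/null 2>&1
}

start_keepalived_if_mysql_up() {
    if mysql_port_open; then
        "$SERVICE_CMD" keepalived start
    else
        echo "port $MYSQL_PORT is not reachable; leaving keepalived stopped" >&2
        return 1
    fi
}
```

After running service mysql start on the failed node, calling `start_keepalived_if_mysql_up` would bring keepalived back only if the port check passes. Because both nodes use nopreempt, the recovered node should rejoin as BACKUP rather than take the VIP back.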
