Environment preparation

192.168.222.247  k8s-master  CentOS 7.5

192.168.222.250  k8s-node1   CentOS 7.5

Basic environment setup

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config   # permanent, takes effect after a reboot

setenforce 0   # switch to permissive mode for the current boot
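The sed one-liner replaces the first "enforcing" on every line, comments included, and leaves the file unchanged if it already says SELINUX=permissive. As a minimal sketch, assuming you would rather edit the file's text from Python, the hypothetical helper below rewrites only the active SELINUX= line:

```python
import re


def disable_selinux(config_text):
    """Return /etc/selinux/config text with the SELINUX= setting set to disabled.

    Hypothetical helper: only the active setting line is rewritten. Comment
    lines start with '#', so they never match, and SELINUXTYPE= does not
    match either because '^SELINUX=' requires '=' right after 'SELINUX'.
    """
    return re.sub(r"^SELINUX=\S*", "SELINUX=disabled", config_text, flags=re.MULTILINE)
```

Reading /etc/selinux/config, passing its contents through disable_selinux and writing the result back has the same effect as the sed command. Either way, the file change only applies after a reboot, which is why setenforce 0 is run as well.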

Disable swap

swapoff -a   # temporary, until the next reboot

vim /etc/fstab   # permanent: comment out the swap entry
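For the permanent step, the edit in /etc/fstab is to comment out the swap entry. A small sketch of that edit, assuming a standard fstab layout where the third field is the filesystem type; comment_out_swap is a hypothetical name, and the function works on text so the result can be reviewed before it is written back:

```python
def comment_out_swap(fstab_text):
    """Return fstab text with every active swap entry commented out.

    fstab fields are: device, mount point, filesystem type, options, dump, pass.
    A line counts as a swap entry when its third field is "swap".
    Lines that are already comments are left alone, so the edit is idempotent.
    """
    lines = []
    for line in fstab_text.splitlines():
        fields = line.split()
        is_comment = not fields or fields[0].startswith("#")
        if not is_comment and len(fields) >= 3 and fields[2] == "swap":
            line = "#" + line
        lines.append(line)
    return "\n".join(lines) + "\n"
```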

Set the hostnames

hostnamectl set-hostname k8s-master   # on the master

hostnamectl set-hostname k8s-node1   # on the node

Pass bridged IPv4 traffic to the iptables chains:

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

Without this setting, the kubeadm init preflight checks report an error.
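To confirm the two bridge settings before running kubeadm init, the sketch below parses a sysctl.d file and reports which required keys are missing or not set to 1. The names REQUIRED, parse_sysctl_conf, missing_settings and proc_path are hypothetical. The live values can be read from the files under /proc/sys/net/bridge/, which only exist once the br_netfilter kernel module is loaded.

```python
# Keys written to /etc/sys.d/k8s.conf above and the value kubeadm expects.
REQUIRED = {
    "net.bridge.bridge-nf-call-ip6tables": "1",
    "net.bridge.bridge-nf-call-iptables": "1",
}


def parse_sysctl_conf(text):
    """Parse 'key = value' lines from a sysctl.d file, skipping blanks and comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


def missing_settings(text):
    """Return the required keys that are absent or not set to the expected value."""
    settings = parse_sysctl_conf(text)
    return sorted(k for k, v in REQUIRED.items() if settings.get(k) != v)


def proc_path(key):
    """Map a sysctl key to the /proc/sys file that holds its live value."""
    return "/proc/sys/" + key.replace(".", "/")
```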

Configure the yum repository

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all

yum makecache
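The repository file written by the heredoc above is an INI-style file. As an illustration only, the same [kubernetes] section can be rendered with Python 3's configparser; the function name kubernetes_repo and its defaults are hypothetical, and writing the text to /etc/yum.repos.d is left out:

```python
import configparser
import io

ALIYUN_BASEURL = "https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64"
ALIYUN_GPGKEYS = (
    "https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg",
    "https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg",
)


def kubernetes_repo(baseurl=ALIYUN_BASEURL, gpgkeys=ALIYUN_GPGKEYS):
    """Render the [kubernetes] section of /etc/yum.repos.d/kubernetes.repo as text."""
    parser = configparser.ConfigParser(interpolation=None)  # URLs are taken literally
    parser["kubernetes"] = {
        "name": "Kubernetes",
        "baseurl": baseurl,
        "enabled": "1",
        "gpgcheck": "1",       # verify package signatures
        "repo_gpgcheck": "1",  # verify the repository metadata signature
        "gpgkey": " ".join(gpgkeys),  # several keys, separated by spaces
    }
    buf = io.StringIO()
    parser.write(buf, space_around_delimiters=False)  # key=value, as in the heredoc
    return buf.getvalue()
```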

Add the Aliyun Docker CE repository and install Docker

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum -y install docker-ce-18.06.1.ce-3.el7

Start Docker

systemctl enable docker && systemctl start docker

docker --version   # check the Docker version

Install kubeadm, kubelet and kubectl

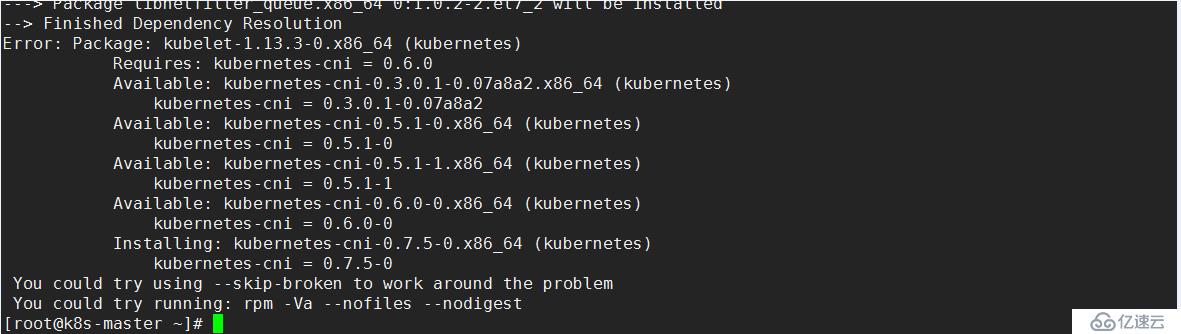

yum install -y kubelet-1.13.3 kubeadm-1.13.3 kubectl-1.13.3

This pins the package versions, but the installation fails with:

No package kubernetes-cni=0.6.0 available.

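Solution

CNI (Container Network Interface) is a standard, generic interface. Container platforms include Docker, Kubernetes and Mesos; container networking solutions include flannel, Calico and Weave. If a networking solution implements one standard interface, it can provide networking to every container platform that supports the same protocol, and CNI is exactly such a standard interface.

Installing from the yum repository causes a version conflict. The workaround is to find the RPM packages the yum installation depends on and install them by hand.

How do you find the packages?

Following the official Kubernetes yum repository configuration, the RPM download links can be found in the metadata file https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64/repodata/primary.xml.

The RPM download prefix is https://yum.kubernetes.io. Each package's location entry in primary.xml gives its path under the pool directory, for example: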

pool/fe33057ffe95bfae65e2f269e1b05e99308853176e24a4d027bc082b471a07c0-kubernetes-cni-0.6.0-0.x86_64.rpm

<location href="../../pool/fe33057ffe95bfae65e2f269e1b05e99308853176e24a4d027bc082b471a07c0-kubernetes-cni-0.6.0-0.x86_64.rpm"/>

40ff4cf56f1b01f7415f0a4708e190cff5fbf037319c38583c52ae0469c8fcf3-kubeadm-1.11.1-0.x86_64.rpm
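Scanning primary.xml by hand for each package is tedious. A sketch using the standard library's ElementTree, assuming the usual createrepo layout (a metadata root in the http://linux.duke.edu/metadata/common namespace, with name, version and location elements under each package); find_locations and the trimmed sample are illustrative, not an excerpt of the real file:

```python
import xml.etree.ElementTree as ET

# Default namespace of yum's primary.xml (assumed from the createrepo format).
NS = {"common": "http://linux.duke.edu/metadata/common"}

# Trimmed, illustrative sample; the real file lists every package in the repo.
SAMPLE_PRIMARY_XML = """\
<metadata xmlns="http://linux.duke.edu/metadata/common" packages="1">
  <package type="rpm">
    <name>kubernetes-cni</name>
    <arch>x86_64</arch>
    <version epoch="0" ver="0.6.0" rel="0"/>
    <location href="../../pool/fe33057ffe95bfae65e2f269e1b05e99308853176e24a4d027bc082b471a07c0-kubernetes-cni-0.6.0-0.x86_64.rpm"/>
  </package>
</metadata>
"""


def find_locations(primary_xml, wanted):
    """Map each wanted (name, version) pair to its location href in primary.xml."""
    root = ET.fromstring(primary_xml)
    found = {}
    for pkg in root.findall("common:package", NS):
        name = pkg.findtext("common:name", namespaces=NS)
        version = pkg.find("common:version", NS)
        location = pkg.find("common:location", NS)
        if version is None or location is None:
            continue  # skip malformed entries
        key = (name, version.get("ver"))
        if key in wanted:
            found[key] = location.get("href")
    return found
```

For example, find_locations(SAMPLE_PRIMARY_XML, {("kubernetes-cni", "0.6.0")}) returns the ../../pool/... href for that package; pairs that are not in the file are simply absent from the result.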

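Building the URL:

https://yum.kubernetes.io/ + pool/fe33057ffe95bfae65e2f269e1b05e99308853176e24a4d027bc082b471a07c0 + "-" + package file name (for example kubernetes-cni-0.6.0-0.x86_64.rpm), which gives https://yum.kubernetes.io/pool/fe33057ffe95bfae65e2f269e1b05e99308853176e24a4d027bc082b471a07c0-kubernetes-cni-0.6.0-0.x86_64.rpm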
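The same concatenation as a function, plus an alternative that resolves the relative href against the repository baseurl the way yum does. Hrefs are relative to the baseurl, so ../../pool/... climbs from /yum/repos/kubernetes-el7-x86_64/ to /yum/pool/.... Both function names are hypothetical, and the assumption that yum.kubernetes.io and packages.cloud.google.com/yum serve the same pool directory has not been verified here. These Google-hosted legacy repositories have since been retired by the Kubernetes project, so the URLs may no longer resolve.

```python
from urllib.parse import urljoin

# Assumed constants, taken from the URLs quoted in the text.
REPO_BASEURL = "https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64/"
YUM_PREFIX = "https://yum.kubernetes.io"


def url_from_href(href, baseurl=REPO_BASEURL):
    """Resolve a primary.xml location href relative to the repository baseurl.

    The trailing slash on baseurl matters: without it, urljoin would treat
    the last path segment as a file name and drop it before resolving.
    """
    return urljoin(baseurl, href)


def url_from_prefix(pool_path, prefix=YUM_PREFIX):
    """Join the prefix and a 'pool/<sha256>-<package>.rpm' path with a single slash."""
    return prefix.rstrip("/") + "/" + pool_path.lstrip("/")
```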

Which packages are needed?

cri-tools-1.11.0-0.x86_64.rpm

kubectl-1.11.1-0.x86_64.rpm

socat-1.7.3.2-2.el7.x86_64.rpm

kubelet-1.11.1-0.x86_64.rpm

kubernetes-cni-0.6.0-0.x86_64.rpm

kubeadm-1.11.1-0.x86_64.rpm

socat-1.7.3.2-2.el7.x86_64.rpm has no download link in that metadata file, so install it directly with yum.

Installation order:

yum install socat -y

rpm -ivh cri-tools-1.13.3-0.x86_64.rpm

rpm -ivh kubectl-1.13.3-0.x86_64.rpm

rpm -ivh kubelet-1.13.3-0.x86_64.rpm kubernetes-cni-0.6.0-0.x86_64.rpm

rpm -ivh kubeadm-1.13.3-0.x86_64.rpm

Initialize with kubeadm

kubeadm init \
  --apiserver-advertise-address=192.168.222.247 \
  --kubernetes-version v1.13.3 \
  --service-cidr=10.1.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
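Before running kubeadm init, it is worth checking that these addresses do not collide: the service CIDR and the pod CIDR must not overlap, and neither should contain the node's own address. A sketch with Python's ipaddress module; the function names are hypothetical. kubernetes_service_ip shows why 10.1.0.1 appears in the apiserver certificate in the log further down, since the built-in kubernetes Service takes the first address of --service-cidr. The pod CIDR 10.244.0.0/16 is also the network that flannel's manifest uses by default.

```python
import ipaddress


def check_init_networks(advertise_address, service_cidr, pod_cidr):
    """Return a list of problems with the kubeadm init address/CIDR flags (empty = OK)."""
    host = ipaddress.ip_address(advertise_address)
    service = ipaddress.ip_network(service_cidr)
    pod = ipaddress.ip_network(pod_cidr)
    problems = []
    if service.overlaps(pod):
        problems.append("service CIDR %s overlaps pod CIDR %s" % (service, pod))
    for label, net in (("service", service), ("pod", pod)):
        if host in net:
            problems.append("advertise address %s lies inside the %s CIDR %s" % (host, label, net))
    return problems


def kubernetes_service_ip(service_cidr):
    """First usable address of the service CIDR, used by the built-in 'kubernetes' Service."""
    return next(ipaddress.ip_network(service_cidr).hosts())
```

With the values above, check_init_networks("192.168.222.247", "10.1.0.0/16", "10.244.0.0/16") returns an empty list.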

It fails with:

[ERROR ImagePull]: failed to pull image [k8s.gcr.io/kube-apiserver-amd64:v1.13.3]: exit status 1

[ERROR ImagePull]: failed to pull image [k8s.gcr.io/kube-controller-manager-amd64:v1.13.3]: exit status 1

[ERROR ImagePull]: failed to pull image [k8s.gcr.io/kube-scheduler-amd64:v1.13.3]: exit status 1

[ERROR ImagePull]: failed to pull image [k8s.gcr.io/kube-proxy-amd64:v1.13.3]: exit status 1

[ERROR ImagePull]: failed to pull image [k8s.gcr.io/pause:3.1]: exit status 1

[ERROR ImagePull]: failed to pull image [k8s.gcr.io/etcd-amd64:3.2.24]: exit status 1

[ERROR ImagePull]: failed to pull image [k8s.gcr.io/coredns:1.1.3]: exit status 1

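These images cannot be pulled, so either use ×××, or find a way to download the images in advance. Fortunately, Aliyun provides a mirror of them under the prefix registry.cn-hangzhou.aliyuncs.com/google_containers. The script below pulls each image from the mirror and retags it with the k8s.gcr.io/ name that kubeadm looks for: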

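#!/usr/bin/env python
# -*- coding: utf-8 -*-
# Pull the control-plane images from the Aliyun mirror, retag them as k8s.gcr.io/*
# so that kubeadm finds them locally, then remove the mirror tags.
# subprocess.check_call works on both Python 2.7 (CentOS 7 default) and Python 3.
import subprocess

old_base_url = 'registry.cn-hangzhou.aliyuncs.com/google_containers/'
new_base_url = 'k8s.gcr.io/'

# Names and versions must match the ImagePull errors reported by kubeadm init above
pull_list = [
    'kube-apiserver-amd64:v1.13.3',
    'kube-controller-manager-amd64:v1.13.3',
    'kube-scheduler-amd64:v1.13.3',
    'kube-proxy-amd64:v1.13.3',
    'pause:3.1',
    'etcd-amd64:3.2.24',
    'coredns:1.1.3',
]

for container in pull_list:
    # Plain concatenation: image references are not filesystem paths
    old_container = old_base_url + container
    new_container = new_base_url + container
    print("Pulling image %s ..." % old_container)
    subprocess.check_call(["docker", "pull", old_container])
    print("========== Retagging image ==========")
    subprocess.check_call(["docker", "tag", old_container, new_container])
    print("Removing the old tag %s" % old_container)
    subprocess.check_call(["docker", "rmi", old_container])
    print("=====================================")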

Run kubeadm init again

kubeadm init --apiserver-advertise-address=192.168.222.247 --kubernetes-version v1.13.3 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

It prints the following log:

[init] using Kubernetes version: v1.13.3

[preflight] running pre-flight checks

[WARNING KubernetesVersion]: kubernetes version is greater than kubeadm version. Please consider to upgrade kubeadm. kubernetes version: 1.13.3. Kubeadm version: 1.11.x

I0421 02:16:48.942948   19072 kernel_validator.go:81] Validating kernel version

I0421 02:16:48.943124   19072 kernel_validator.go:96] Validating kernel config

[WARNING SystemVerification]: docker version is greater than the most recently validated version. Docker version: 18.06.1-ce. Max validated version: 17.03

[preflight/images] Pulling images required for setting up a Kubernetes cluster

[preflight/images] This might take a minute or two, depending on the speed of your internet connection

[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[preflight] Activating the kubelet service

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 192.168.222.247]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated sa key and public key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [127.0.0.1 ::1]

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.222.247 127.0.0.1 ::1]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] this might take a minute or longer if the control plane images have to be pulled

[apiclient] All control plane components are healthy after 49.545530 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.13" in namespace kube-system with the configuration for the kubelets in the cluster

[markmaster] Marking the node k8s-master as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node k8s-master as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master" as an annotation

[bootstraptoken] using token: e9tdub.hh2rmlrmbd83leja

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube

  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

  kubeadm join 192.168.222.247:6443 --token e9tdub.hh2rmlrmbd83leja --discovery-token-ca-cert-hash sha256:e3c9f57bb590bd7d5086864239ded2beaa6c4c640a35835bd2ca4add68373499
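When several nodes have to join, the join line can be checked or split up programmatically, for instance to catch a token or hash that was truncated while copying. A sketch, assuming the bootstrap token format [a-z0-9]{6}.[a-z0-9]{16} and a sha256: hash of 64 hex characters; parse_join_command is a hypothetical helper. Bootstrap tokens expire (after 24 hours by default), and kubeadm token create --print-join-command on the master prints a fresh join line.

```python
import re

# Bootstrap token: [a-z0-9]{6}.[a-z0-9]{16}; CA hash: "sha256:" + 64 hex characters.
JOIN_RE = re.compile(
    r"kubeadm join (?P<endpoint>\S+)"
    r"\s+--token (?P<token>[a-z0-9]{6}\.[a-z0-9]{16})"
    r"\s+--discovery-token-ca-cert-hash (?P<ca_hash>sha256:[0-9a-f]{64})"
)


def parse_join_command(command):
    """Split a 'kubeadm join' line into host, port, token and CA certificate hash."""
    match = JOIN_RE.search(command)
    if match is None:
        raise ValueError("not a complete kubeadm join command: %r" % command)
    host, _, port = match.group("endpoint").rpartition(":")
    return {
        "host": host,
        "port": int(port),
        "token": match.group("token"),
        "ca_cert_hash": match.group("ca_hash"),
    }
```

Extra flags after the hash, such as --ignore-preflight-errors=all, are ignored by the pattern.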

### Something odd

[WARNING KubernetesVersion]: kubernetes version is greater than kubeadm version. Please consider to upgrade kubeadm. kubernetes version: 1.13.3. Kubeadm version: 1.11.x

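The kubeadm installed earlier is probably the wrong version (the warning reports 1.11.x).

Upgrade it, or uninstall and reinstall it:

yum upgrade kubeadm-1.13.3

kubeadm reset   # undo the previous init so the node can be initialized again

Then run kubeadm init again.

Configure the kubectl tool: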

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

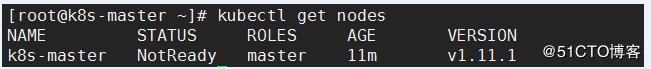

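Check the cluster status

PS: this screenshot was taken on 1.11.1; the later ones are after the upgrade to 1.13.3.

Install the Pod network add-on (CNI)

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

After the network add-on is installed, the cluster status returns to normal. (flannel's default network, 10.244.0.0/16, matches the --pod-network-cidr passed to kubeadm init.)

Export the images from the master node and import them on the node: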

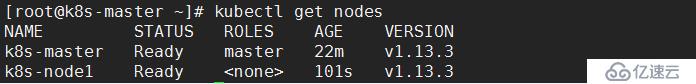

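Run on the node (the token and hash differ from the log above because the master was reset and initialized again):

kubeadm join 192.168.222.247:6443 --token v8se4d.oj94uy1q308yqt83 --discovery-token-ca-cert-hash sha256:b5ba2f86cd1df0e8c457ce3377f92437c6592f299ee4ffea123d214af6e4d9ea --ignore-preflight-errors=all   # without this flag the preflight checks fail

The following output means the node has joined the cluster: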

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

Check the cluster status again; the node has joined and the cluster is healthy.

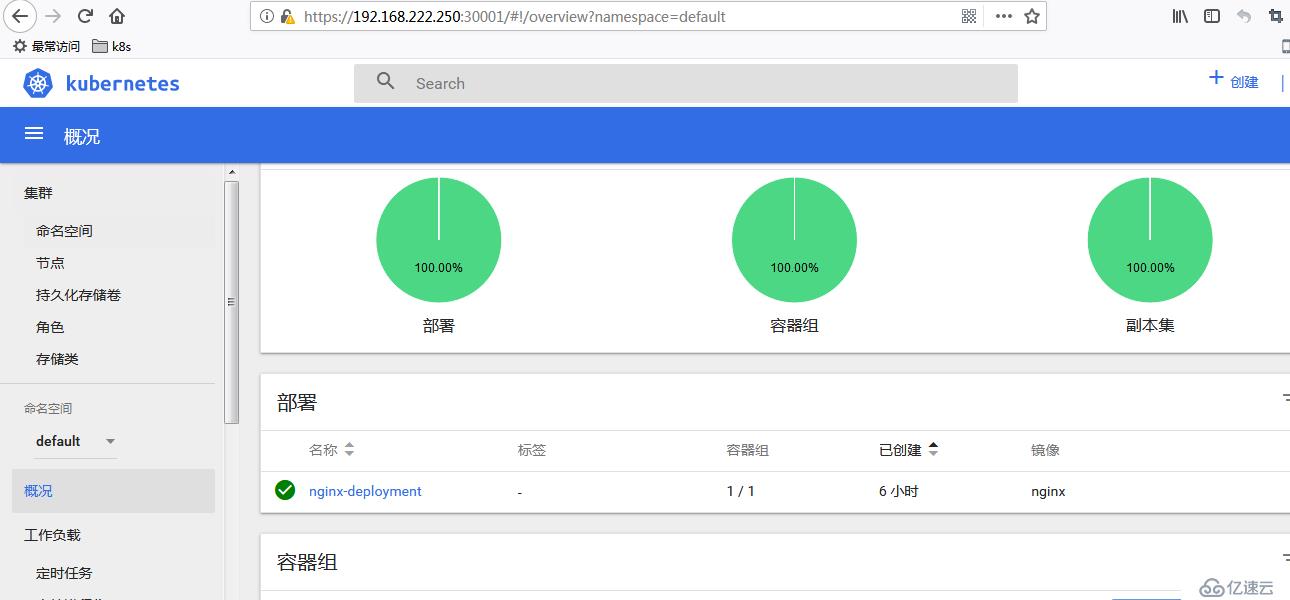

Test the Kubernetes cluster

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl get pod,svc

Access URL: http://NodeIP:Port   # nginx serves plain HTTP; Port is the NodePort shown by kubectl get svc
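The Port in the URL is the NodePort that Kubernetes assigned, shown in the PORT(S) column of kubectl get svc as service-port:node-port/protocol. A small parsing sketch (node_ports is a hypothetical name); kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}' returns the same number directly.

```python
import re


def node_ports(ports_column):
    """Map service port -> NodePort from a 'kubectl get svc' PORT(S) value.

    NodePort services print entries like '80:31234/TCP'; plain ClusterIP
    entries such as '443/TCP' have no NodePort and are skipped.
    """
    result = {}
    for entry in ports_column.split(","):
        match = re.fullmatch(r"(\d+):(\d+)/(TCP|UDP|SCTP)", entry.strip())
        if match:
            result[int(match.group(1))] = int(match.group(2))
    return result
```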

Deploy kubernetes-dashboard-amd64

Pull the image

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1 xx/kubernetes-dashboard-amd64:v1.10.1

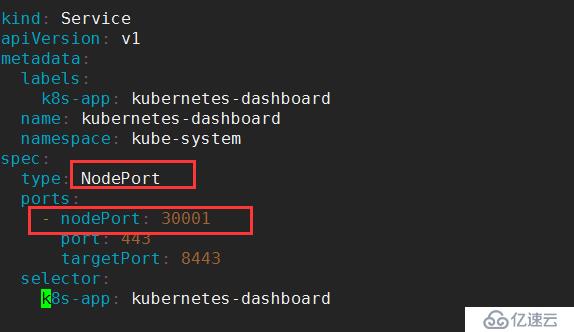

Download the YAML file

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Change the Service type to NodePort
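In kubernetes-dashboard.yaml the change amounts to adding type: NodePort to the Service's spec, optionally with a fixed nodePort on the port entry. The sketch below makes that change on the manifest loaded as a Python dict. to_nodeport is hypothetical, DASHBOARD_SERVICE is our assumption of what the v1.10.1 Service looks like (port 443 forwarding to targetPort 8443), and 30001 in the usage is an arbitrary example.

```python
import copy


def to_nodeport(service, node_port=None):
    """Return a copy of a Service manifest (parsed into a dict) switched to type NodePort."""
    svc = copy.deepcopy(service)  # leave the caller's manifest untouched
    spec = svc.setdefault("spec", {})
    spec["type"] = "NodePort"
    if node_port is not None:
        # Without an explicit nodePort, Kubernetes picks a free one
        # from the default 30000-32767 range.
        if not 30000 <= node_port <= 32767:
            raise ValueError("nodePort %d is outside the default range" % node_port)
        spec["ports"][0]["nodePort"] = node_port
    return svc


# Assumed shape of the dashboard Service in kubernetes-dashboard.yaml (v1.10.1).
DASHBOARD_SERVICE = {
    "kind": "Service",
    "apiVersion": "v1",
    "metadata": {"name": "kubernetes-dashboard", "namespace": "kube-system"},
    "spec": {
        "ports": [{"port": 443, "targetPort": 8443}],
        "selector": {"k8s-app": "kubernetes-dashboard"},
    },
}
```

to_nodeport(DASHBOARD_SERVICE, node_port=30001) returns a copy with type NodePort and nodePort 30001 on the first port; the input dict is left unchanged.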

Deploy

kubectl create -f kubernetes-dashboard.yaml

Create a service account and bind it to the built-in cluster-admin cluster role:

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

The token value in the output is used to log in.
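If you want the token without reading through the whole describe output, the sketch below picks out the token: field. extract_token is hypothetical, and it assumes the field is printed as "token:" followed by the value on the same line:

```python
def extract_token(describe_output):
    """Return the value of the 'token:' field from 'kubectl describe secret' output, or None."""
    for line in describe_output.splitlines():
        # Split at the first colon only; the token itself contains no colons.
        key, sep, value = line.partition(":")
        if sep and key.strip() == "token":
            return value.strip()
    return None
```

extract_token(output) returns the token string, or None if the output has no token field.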

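Login page: open https://NodeIP:Port (the dashboard serves HTTPS; Port is the NodePort of the kubernetes-dashboard Service) and sign in with the token.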
