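Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework, and support that a variety of storage solutions need to integrate natively with cloud-native environments. Rook does this by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management. It implements these functions with the facilities provided by the underlying cloud-native container management, scheduling, and orchestration platform.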

1. Install the Rook Operator with Helm

Ceph Operator Helm Chart

# helm repo add rook-release https://charts.rook.io/release

# helm install --namespace rook-ceph rook-release/rook-ceph --name rook-ceph

2. Add a new disk, sdb, to each of the three Node machines in the k8s cluster

[root@k8s-node01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 200G 0 disk

sdb 8:16 0 50G 0 disk

3. Create the Rook Cluster

# git clone https://github.com/rook/rook.git

# cd rook/cluster/examples/kubernetes/ceph/

# kubectl apply -f cluster.yaml

To delete a Ceph cluster that has already been created, you also need to delete the files under the /var/lib/rook/ directory on the nodes.

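4. Deploy the Ceph dashboard

The Ceph dashboard is already enabled by default in cluster.yaml, but its Service is of type ClusterIP, which is reachable only from inside the cluster. To reach it from outside the cluster, expose it through a NodePort Service.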

# kubectl get svc -n rook-ceph |grep mgr-dashboard

rook-ceph-mgr-dashboard ClusterIP 10.106.163.135 <none> 8443/TCP 6h4m

# kubectl apply -f dashboard-external-https.yaml

# kubectl get svc -n rook-ceph |grep mgr-dashboard

rook-ceph-mgr-dashboard ClusterIP 10.106.163.135 <none> 8443/TCP 6h4m

rook-ceph-mgr-dashboard-external-https NodePort 10.98.230.103 <none> 8443:31656/TCP 23h

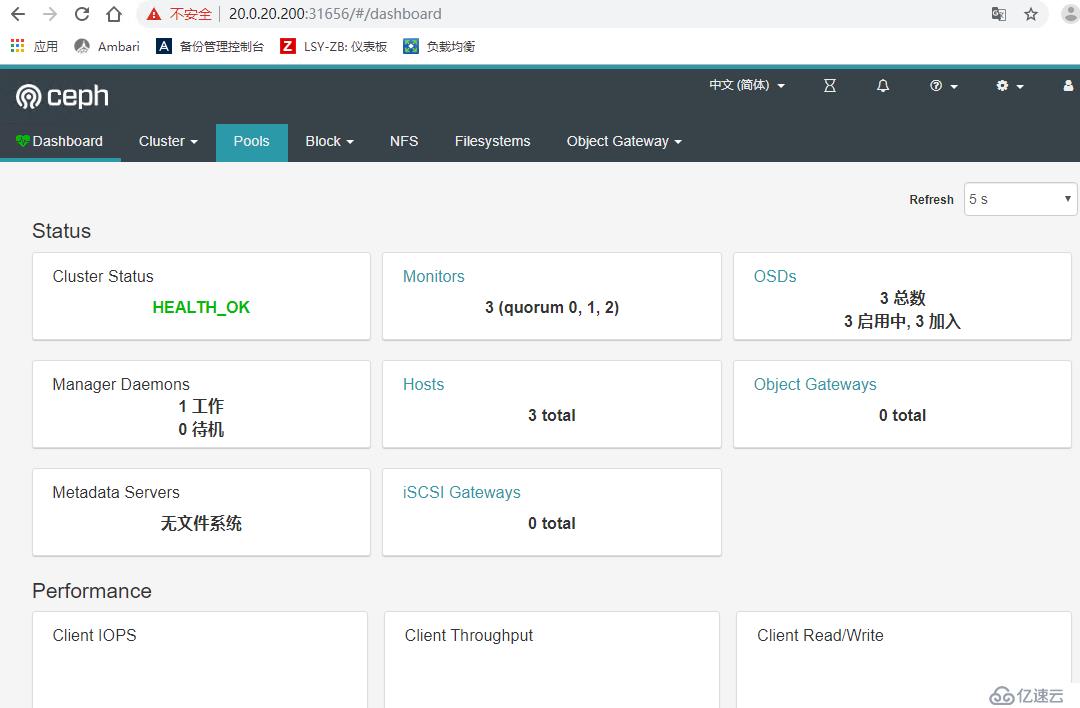

5. Log in to the Ceph dashboard

# kubectl cluster-info |grep master

Kubernetes master is running at https://20.0.20.200:6443
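The dashboard is then reachable over HTTPS on a node address, for example the master address printed above, at the NodePort from step 4 (31656 in that listing). As a minimal sketch, the port can be read out of the `kubectl get svc` listing rather than by eye. The names `dashboard_node_port` and `dashboard_url` are hypothetical helpers, not part of Rook or kubectl, and the sketch assumes the default column layout shown in step 4.

```shell
# Hypothetical helper: reads `kubectl get svc` lines on stdin and prints the
# NodePort of the rook-ceph-mgr-dashboard-external-https Service.
dashboard_node_port() {
  awk '$1 == "rook-ceph-mgr-dashboard-external-https" {
    split($5, ports, "[:/]")   # "8443:31656/TCP" -> "8443", "31656", "TCP"
    print ports[2]
  }'
}

# Hypothetical helper: builds the dashboard URL from a node address and a port.
dashboard_url() {
  printf 'https://%s:%s\n' "$1" "$2"
}
```

A possible use is `kubectl get svc -n rook-ceph | dashboard_node_port`, followed by `dashboard_url 20.0.20.200 31656`. kubectl can also return the port directly with `kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external-https -o jsonpath='{.spec.ports[0].nodePort}'`.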

Get the login password

# kubectl get pod -n rook-ceph | grep mgr

rook-ceph-mgr-a-6b9cf7f6f6-fdhz5 1/1 Running 0 6h21m

# kubectl -n rook-ceph logs rook-ceph-mgr-a-6b9cf7f6f6-fdhz5 | grep password

debug 2019-09-20 01:09:34.166 7f51ba8d2700 0 log_channel(audit) log [DBG] : from='client.24290 -' entity='client.admin' cmd=[{"username": "admin", "prefix": "dashboard set-login-credentials", "password": "5PGcUfGey2", "target": ["mgr", ""], "format": "json"}]: dispatch
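The password is the value of the "password" field in that audit log line (the user name is admin). A minimal sketch for pulling it out with sed, assuming the log line keeps the format shown above; `extract_dashboard_password` is a hypothetical helper name, not a Rook or Ceph command.

```shell
# Hypothetical helper: reads mgr log lines on stdin and prints the value of
# the "password" field from the most recent line that contains one.
extract_dashboard_password() {
  sed -n 's/.*"password": "\([^"]*\)".*/\1/p' | tail -n 1
}
```

It could be used as `kubectl -n rook-ceph logs rook-ceph-mgr-a-6b9cf7f6f6-fdhz5 | extract_dashboard_password`, with the pod name taken from the previous command.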

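6. Deploy the Ceph toolbox

A Ceph cluster started with the defaults has Ceph authentication enabled, so logging in to a Pod that runs a Ceph component does not let you query the cluster status or run CLI commands. For that, deploy the Ceph toolbox.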

# kubectl apply -f toolbox.yaml

deployment.apps/rook-ceph-tools created

# kubectl -n rook-ceph get pods -o wide | grep ceph-tools

rook-ceph-tools-7cf4cc7568-m6wbb 1/1 Running 0 84s 20.0.20.206 k8s-node03 <none> <none>

# kubectl -n rook-ceph exec -it rook-ceph-tools-7cf4cc7568-m6wbb -- bash

[root@k8s-node03 /]# ceph status

  cluster:
    id:     aa31c434-13cd-4858-9535-3eb6fa1a441c
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 6h)
    mgr: a(active, since 6h)
    osd: 3 osds: 3 up (since 6h), 3 in (since 6h)

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   3.0 GiB used, 144 GiB / 147 GiB avail
    pgs:

[root@k8s-node03 /]# ceph df

RAW STORAGE:
    CLASS     SIZE        AVAIL       USED        RAW USED     %RAW USED
    hdd       147 GiB     144 GiB     4.7 MiB     3.0 GiB      2.04
    TOTAL     147 GiB     144 GiB     4.7 MiB     3.0 GiB      2.04

POOLS:
    POOL     ID     STORED     OBJECTS     USED     %USED     MAX AVAIL
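For scripted checks, the health value can be picked out of the `ceph status` output. This is a sketch that assumes the plain-text layout shown above; `ceph_health` is a hypothetical helper name.

```shell
# Hypothetical helper: reads `ceph status` output on stdin and prints the
# value of the first "health:" line, e.g. HEALTH_OK.
ceph_health() {
  awk '$1 == "health:" { print $2; exit }'
}
```

One way to use it from outside the toolbox Pod is `kubectl -n rook-ceph exec rook-ceph-tools-7cf4cc7568-m6wbb -- ceph status | ceph_health`. Ceph also has a `ceph health` command that reports the health state on its own.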

7. Create a Pool and StorageClass

# kubectl apply -f flex/storageclass.yaml

cephblockpool.ceph.rook.io/replicapool created

storageclass.storage.k8s.io/rook-ceph-block created

# kubectl get storageclass

NAME PROVISIONER AGE

rook-ceph-block ceph.rook.io/block 50s

8. Create a PVC

# cat cephfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: rook-ceph-block

# kubectl apply -f cephfs-pvc.yaml

persistentvolumeclaim/cephfs-pvc created

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephfs-pvc Bound pvc-cc158fa0-30f9-420b-96c8-b03b474eb9f7 10Gi RWO rook-ceph-block 4s
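A STATUS of Bound means the claim was provisioned from the rook-ceph-block StorageClass. As a rough sketch for checking this in a script, the status column can be read from the `kubectl get pvc` listing; `pvc_status` is a hypothetical helper name and the sketch assumes the default column layout shown above.

```shell
# Hypothetical helper: reads `kubectl get pvc` output on stdin and prints the
# STATUS column for the claim whose name is passed as the first argument.
pvc_status() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $2 }'
}
```

For example, `kubectl get pvc | pvc_status cephfs-pvc`. kubectl can also report the phase directly with `kubectl get pvc cephfs-pvc -o jsonpath='{.status.phase}'`.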
