
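I have always used TensorFlow in my projects, but I recently needed to tackle some NLP problems and am still fairly new to PyTorch. So I set out to learn how PyTorch is used for simple natural language processing tasks, and this post is a record of what I found.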

I. PyTorch Basics

First, import the PyTorch packages used throughout this post:

```python
import torch
import torch.autograd as autograd  # autograd provides automatic differentiation for all Tensor operations
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
```

1) Tensors

a) Creating tensors

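```python
# A 2x3 tensor built from nested lists
x = torch.Tensor([[1, 2, 3], [4, 5, 6]])
# A 2x3x4 tensor of random numbers drawn from a standard normal distribution
x = torch.randn((2, 3, 4))
```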
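To round out tensor creation, here is a small sketch of a few other constructors and of how to inspect a tensor's shape and element type. It uses only standard PyTorch calls; note that `torch.tensor` infers the dtype from the data, whereas the legacy `torch.Tensor` constructor used above always produces 32-bit floats (assuming the default dtype has not been changed).

```python
import torch

# torch.tensor infers the dtype from the Python data: integers -> int64
a = torch.tensor([[1, 2, 3], [4, 5, 6]])
# The legacy torch.Tensor constructor always uses the default float type (float32)
b = torch.Tensor([[1, 2, 3], [4, 5, 6]])

# Constructors that take a shape instead of data
zeros = torch.zeros(2, 3)
ones = torch.ones(2, 3)
r = torch.randn(2, 3, 4)  # samples from a standard normal distribution

print(a.dtype, b.dtype)    # torch.int64 torch.float32
print(r.shape)             # torch.Size([2, 3, 4])
print(r.dim(), r.numel())  # 3 24
```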

b) Tensor arithmetic

```python
x = torch.Tensor([[1, 2], [3, 4]])
y = torch.Tensor([[5, 6], [7, 8]])
z = x + y  # element-wise sum
```
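Note that `+`, like `*`, operates element by element; matrix multiplication is a separate operator. A minimal sketch contrasting the two on the same `x` and `y` values:

```python
import torch

x = torch.tensor([[1., 2.], [3., 4.]])
y = torch.tensor([[5., 6.], [7., 8.]])

# +, -, * and / all operate element-wise
s = x + y   # values [[6, 8], [10, 12]]
p = x * y   # values [[5, 12], [21, 32]]
# @ (equivalently torch.matmul) is the matrix product
m = x @ y   # values [[19, 22], [43, 50]]
print(s, p, m, sep="\n")
```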

c) Reshaping tensors

```python
x = torch.randn(2, 3, 4)
# Flatten into a 1-D tensor of 24 elements
x = x.view(-1)
# Reshape into 4x6
x = x.view(4, 6)
```
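`view` only changes how the same memory is indexed, which has two practical consequences: modifying the view modifies the original tensor, and `view` fails on non-contiguous tensors (for example after a transpose), where `reshape` can be used instead. A short sketch:

```python
import torch

x = torch.randn(2, 3, 4)   # 24 elements in total
flat = x.view(-1)          # -1 lets PyTorch infer the size: shape (24,)
m = x.view(4, 6)           # any shape whose product is 24 works
m2 = x.view(4, -1)         # at most one dimension may be -1: shape (4, 6)

# A view shares memory with the original tensor
flat[0] = 100.0
print(x[0, 0, 0])          # tensor(100.)

# After a transpose the tensor is no longer contiguous, so view() raises an error
t = x.transpose(0, 1)      # shape (3, 2, 4)
try:
    t.view(-1)
except RuntimeError as err:
    print("view failed:", type(err).__name__)
# reshape() copies the data when a plain view is impossible
r = t.reshape(-1)
```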

2) Computation graphs and automatic differentiation

a) Variables

```python
# Wrap a Tensor in a Variable that tracks gradients
x = autograd.Variable(torch.Tensor([1, 2, 3]), requires_grad=True)
# Get the underlying Tensor back from the Variable
y = x.data
```
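Since PyTorch 0.4, `Variable` and `Tensor` have been merged: `autograd.Variable(t)` now simply returns a Tensor, and gradient tracking is switched on with `requires_grad` directly. A sketch of the modern equivalents of the snippet above, using `.detach()`, which current PyTorch recommends over `.data` for getting a tensor that is cut off from the graph:

```python
import torch

# Create a tensor that records operations for automatic differentiation
x = torch.tensor([1., 2., 3.], requires_grad=True)
# detach() returns a tensor sharing the same data but excluded from the graph
y = x.detach()
print(x.requires_grad, y.requires_grad)  # True False

# Operations on x produce results whose grad_fn records how they were computed
z = x * 2
print(z.grad_fn is not None)             # True
```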

b) Backpropagating gradients

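```python
x = autograd.Variable(torch.Tensor([1, 2]), requires_grad=True)
y = autograd.Variable(torch.Tensor([3, 4]), requires_grad=True)
z = x + y
# Sum to a scalar
s = z.sum()
# Backpropagate: compute ds/dx and ds/dy
s.backward()
print(x.grad)  # each element of x contributes once to s, so the gradient is [1, 1]
```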
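In the example above every element contributes exactly once to `s`, so the gradient is all ones. A slightly less trivial function makes the derivative visible, and also shows that `.grad` accumulates across `backward()` calls until it is reset, which is why the training loop later calls `model.zero_grad()` before each step:

```python
import torch

x = torch.tensor([1., 2.], requires_grad=True)

# s = sum(x_i^2 + 3 x_i), so ds/dx_i = 2 x_i + 3
s = (x ** 2 + 3 * x).sum()
s.backward()
g1 = x.grad.clone()
print(g1)        # tensor([5., 7.])

# A second backward() adds to the stored gradient instead of replacing it
s = (x ** 2 + 3 * x).sum()
s.backward()
print(x.grad)    # tensor([10., 14.])

# Reset the gradient in place before the next computation
x.grad.zero_()
```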

c) Linear mappings

```python
linear = nn.Linear(3, 5)  # linear map from 3 dimensions to 5
x = autograd.Variable(torch.randn(4, 3))
# The output has shape (4, 5)
y = linear(x)
```
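`nn.Linear(in_features, out_features)` stores a weight of shape `(out_features, in_features)` plus a bias, and computes $y = xW^\top + b$ for each row of the input. A sketch that checks this against a manual computation:

```python
import torch
import torch.nn as nn

linear = nn.Linear(3, 5)   # weight: (5, 3), bias: (5,)
x = torch.randn(4, 3)      # a batch of 4 three-dimensional inputs
y = linear(x)              # shape (4, 5)

# nn.Linear computes y = x W^T + b
manual = x @ linear.weight.t() + linear.bias
print(torch.allclose(y, manual))               # True
print(linear.weight.shape, linear.bias.shape)  # torch.Size([5, 3]) torch.Size([5])
```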

d) Nonlinear mappings (activation functions)

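```python
x = autograd.Variable(torch.randn(5))
print(x)
# ReLU activation: element-wise max(0, x)
x_relu = F.relu(x)
# softmax activation over the only dimension; the results sum to 1
x_soft = F.softmax(x, dim=0)
print(x_soft)
print(x_soft.sum())
# log-softmax: the logarithm of the softmax
print(F.log_softmax(x, dim=0))
```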

Output (recorded with an older PyTorch release, hence the `Variable containing:` format; the numbers are random and will differ between runs):

```
Variable containing:
-0.9347 -0.9882  1.3801 -0.1173  0.9317
[torch.FloatTensor of size 5]

Variable containing:
 0.0481  0.0456  0.4867  0.1089  0.3108
[torch.FloatTensor of size 5]

Variable containing:
 1
[torch.FloatTensor of size 1]

Variable containing:
-3.0350 -3.0885 -0.7201 -2.2176 -1.1686
[torch.FloatTensor of size 5]
```
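The outputs above are related by simple formulas: $\mathrm{softmax}(x)_i = e^{x_i} / \sum_j e^{x_j}$ and $\log\mathrm{softmax}(x)_i = x_i - \log\sum_j e^{x_j}$, so each entry of the last output is the logarithm of the corresponding softmax entry (for instance $e^{-3.0350} \approx 0.0481$). A sketch that recomputes both by hand, plus ReLU as a clamp at zero, and compares them with the built-ins:

```python
import torch
import torch.nn.functional as F

x = torch.randn(5)

# softmax(x)_i = exp(x_i) / sum_j exp(x_j)
manual_soft = torch.exp(x) / torch.exp(x).sum()
# log_softmax(x)_i = x_i - log(sum_j exp(x_j)), computed stably with logsumexp
manual_log_soft = x - torch.logsumexp(x, dim=0)

print(torch.allclose(F.softmax(x, dim=0), manual_soft))          # True
print(torch.allclose(F.log_softmax(x, dim=0), manual_log_soft))  # True
# ReLU is max(0, x) element-wise
print(torch.equal(F.relu(x), torch.clamp(x, min=0)))             # True
```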

II. Building Networks with PyTorch

1) Word embeddings

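Word embeddings are implemented with `nn.Embedding(m, n)`, where m is the number of distinct words in the vocabulary and n is the embedding dimension.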

```python
word_to_idx = {'hello': 0, 'world': 1}
embeds = nn.Embedding(2, 5)  # two words, each with a 5-dimensional embedding
hello_idx = torch.LongTensor([word_to_idx['hello']])
hello_idx = autograd.Variable(hello_idx)
hello_embed = embeds(hello_idx)
print(hello_embed)
```

Output:

```
Variable containing:
-0.6982  0.3909 -1.0760 -1.6215  0.4429
[torch.FloatTensor of size 1x5]
```
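`nn.Embedding` is essentially a lookup table: its `weight` is a `(num_words, embed_dim)` matrix, and looking up index i returns row i. It also accepts several indices at once, which is how a whole context is embedded in the N-gram model below. A sketch:

```python
import torch
import torch.nn as nn

word_to_idx = {'hello': 0, 'world': 1}
embeds = nn.Embedding(2, 5)   # weight matrix of shape (2, 5), initialized randomly

# A lookup with several indices returns one row per index
idxs = torch.tensor([word_to_idx['hello'], word_to_idx['world'], word_to_idx['hello']])
vectors = embeds(idxs)        # shape (3, 5)

# The embedding of word i is simply row i of embeds.weight
print(torch.equal(vectors[0], embeds.weight[0]))  # True
print(vectors.shape)                              # torch.Size([3, 5])
```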

2) N-gram language model

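First, a quick recap of the N-gram language model: given a word sequence $w$, we want to compute $P(w_i \mid w_{i-1}, w_{i-2}, \dots, w_{i-n+1})$, where $w_i$ is the $i$-th word of the sequence.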
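The neural model in this section learns this conditional distribution. For comparison, the classic count-based maximum-likelihood estimate for a trigram model ($n = 3$) is $P(w_i \mid w_{i-2}, w_{i-1}) = \mathrm{count}(w_{i-2}, w_{i-1}, w_i) / \mathrm{count}(w_{i-2}, w_{i-1})$. A small pure-Python sketch; the function name `trigram_mle` and the toy sentence are illustrative and not part of the original code:

```python
from collections import Counter

def trigram_mle(tokens):
    """Return P(w | u, v) estimated by counting, as a dict keyed by (u, v, w)."""
    tri = Counter(zip(tokens, tokens[1:], tokens[2:]))
    # count(u, v) as the context of some trigram (the final word pair has no successor)
    ctx = Counter(zip(tokens, tokens[1:-1]))
    return {(u, v, w): c / ctx[(u, v)] for (u, v, w), c in tri.items()}

tokens = "the cat sat on the cat mat".split()
probs = trigram_mle(tokens)
print(probs[("the", "cat", "sat")])  # 0.5
print(probs[("the", "cat", "mat")])  # 0.5
```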

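```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.autograd as autograd
import torch.optim as optim

# Python 3's range is already lazy; this alias replaces six.moves.xrange
# so that code written with xrange still runs without the six package
xrange = range
```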

Split the text into words:

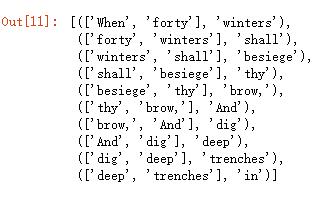

```python
context_size = 2
embed_dim = 10
text_sequence = """When forty winters shall besiege thy brow,
And dig deep trenches in thy beauty's field,
Thy youth's proud livery so gazed on now,
Will be a totter'd weed of small worth held:
Then being asked, where all thy beauty lies,
Where all the treasure of thy lusty days;
To say, within thine own deep sunken eyes,
Were an all-eating shame, and thriftless praise.
How much more praise deserv'd thy beauty's use,
If thou couldst answer 'This fair child of mine
Shall sum my count, and make my old excuse,'
Proving his beauty by succession thine!
This were to be new made when thou art old,
And see thy blood warm when thou feel'st it cold.""".split()  # split on whitespace
# Each trigram pairs two context words with the word that follows them
trigrams = [
    ([text_sequence[i], text_sequence[i + 1]], text_sequence[i + 2])
    for i in range(len(text_sequence) - 2)
]
print(trigrams[:10])
```
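The list comprehension above hard-codes two context words. A hypothetical helper `make_ngrams` (not part of the original code) generalizes it to any `context_size`, producing the same `(context_list, target)` pairs when `context_size=2`:

```python
def make_ngrams(tokens, context_size):
    """Pair each word with the context_size words that precede it."""
    return [
        (tokens[i:i + context_size], tokens[i + context_size])
        for i in range(len(tokens) - context_size)
    ]

tokens = "When forty winters shall besiege thy brow".split()
print(make_ngrams(tokens, 2)[:2])
# [(['When', 'forty'], 'winters'), (['forty', 'winters'], 'shall')]
```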

The resulting pairs have the following form:

```python
# Build the vocabulary index
vocab = set(text_sequence)
word_to_ix = {word: i for i, word in enumerate(vocab)}
```

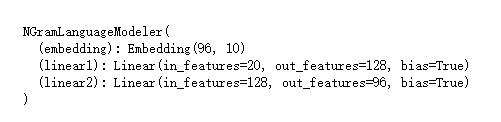
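Because `vocab` is a set, the word-to-index mapping can change between Python runs (string hashing is randomized per process), so indices from one run should not be reused in another. A sketch on a shortened text that sorts the vocabulary for a reproducible mapping and adds a hypothetical reverse mapping `ix_to_word` for turning predictions back into words:

```python
import torch

text_sequence = "When forty winters shall besiege thy brow".split()
# sorted() makes the word -> index mapping identical on every run
vocab = sorted(set(text_sequence))
word_to_ix = {word: i for i, word in enumerate(vocab)}
ix_to_word = {i: word for word, i in word_to_ix.items()}

context = ["When", "forty"]
context_idxs = torch.tensor([word_to_ix[w] for w in context], dtype=torch.long)
print(context_idxs)                                     # tensor([0, 3])
print([ix_to_word[i] for i in context_idxs.tolist()])   # ['When', 'forty']
```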

Build the N-gram language model:

```python
# N-gram language model
class NGramLanguageModeler(nn.Module):
    def __init__(self, vocab_size, embed_dim, context_size):
        super(NGramLanguageModeler, self).__init__()
        # Word embeddings
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        # Two-layer feed-forward classifier
        self.linear1 = nn.Linear(embed_dim * context_size, 128)
        self.linear2 = nn.Linear(128, vocab_size)

    def forward(self, input):
        # (context_size, embed_dim) = (2, 10) flattened into a single (1, 20) row
        embeds = self.embedding(input).view((1, -1))
        out = F.relu(self.linear1(embeds))
        # Output layer: raw scores with no ReLU, so negative scores are not clipped to 0
        out = self.linear2(out)
        log_probs = F.log_softmax(out, dim=1)
        return log_probs
```
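To make the shapes in `forward` concrete, here is a sketch that runs the same kind of layers step by step on one context of two word indices. The vocabulary size of 50 and the indices 3 and 7 are illustrative only; the real model uses `len(vocab)`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

vocab_size, embed_dim, context_size = 50, 10, 2   # illustrative sizes
embedding = nn.Embedding(vocab_size, embed_dim)
linear1 = nn.Linear(embed_dim * context_size, 128)
linear2 = nn.Linear(128, vocab_size)

context = torch.tensor([3, 7])                  # two word indices
e = embedding(context)                          # (2, 10): one vector per context word
e = e.view(1, -1)                               # (1, 20): concatenated into a single row
h = F.relu(linear1(e))                          # (1, 128)
log_probs = F.log_softmax(linear2(h), dim=1)    # (1, vocab_size)

print(e.shape, h.shape, log_probs.shape)
# exp(log_probs) is a probability distribution over the vocabulary
print(torch.exp(log_probs).sum().item())        # approximately 1.0
```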

Print the model to inspect the network structure:

```python
# Print the model to inspect the network structure
model = NGramLanguageModeler(len(vocab), embed_dim, context_size)
print(model)
```
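The printed structure lists three layers, and their parameter counts follow directly from the layer sizes: $Vd$ for the embedding, $(c\,d) \cdot 128 + 128$ for `linear1` and $128\,V + V$ for `linear2`, where $V$ is the vocabulary size, $d$ the embedding dimension and $c$ the context size. A sketch that checks this closed form (the helper `ngram_param_count` is hypothetical) against PyTorch's own count, with 96 words, 10 embedding dimensions and a context of 2 as example sizes:

```python
import torch.nn as nn

def ngram_param_count(vocab_size, embed_dim, context_size, hidden=128):
    """Closed-form parameter count of the embedding plus the two linear layers."""
    embedding = vocab_size * embed_dim
    linear1 = embed_dim * context_size * hidden + hidden
    linear2 = hidden * vocab_size + vocab_size
    return embedding + linear1 + linear2

# The same three layers, collected in a ModuleList just for counting
layers = nn.ModuleList([nn.Embedding(96, 10), nn.Linear(20, 128), nn.Linear(128, 96)])
counted = sum(p.numel() for p in layers.parameters())
print(counted, ngram_param_count(96, 10, 2))  # 16032 16032
```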

Define the loss function and the optimizer:

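```python
# Define the loss function and the optimizer
model = NGramLanguageModeler(len(vocab), embed_dim, context_size)
loss_function = nn.NLLLoss()
# Create the optimizer after the model, so that it updates this model's parameters
optimizer = optim.SGD(model.parameters(), lr=0.01)
losses = []
```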
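`nn.NLLLoss` expects log-probabilities, which is why the model ends with `log_softmax`; the pair is equivalent to `nn.CrossEntropyLoss` applied to raw scores. Either way, the loss for a single example is $-\log P(\text{target})$. A sketch verifying this on random scores for a 5-word vocabulary:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

logits = torch.randn(1, 5)   # raw scores for a 5-word vocabulary
target = torch.tensor([2])   # index of the correct next word

nll = nn.NLLLoss()(F.log_softmax(logits, dim=1), target)
ce = nn.CrossEntropyLoss()(logits, target)
# The loss is -log P(target): the negated log-softmax entry at index 2
manual = -F.log_softmax(logits, dim=1)[0, 2]
print(torch.allclose(nll, ce), torch.allclose(nll, manual))  # True True
```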

Train the model:

```python
# Train the model
for epoch in range(10):
    total_loss = 0.0
    for context, target in trigrams:
        # 1. Turn the context words into a tensor of indices
        context_idxs = [word_to_ix[w] for w in context]
        context_var = autograd.Variable(torch.LongTensor(context_idxs))
        # 2. Clear the gradients left over from the previous step
        model.zero_grad()
        # 3. Forward pass: log-probabilities of the next word
        log_probs = model(context_var)
        # 4. Loss against the index of the actual next word
        loss = loss_function(log_probs, autograd.Variable(torch.LongTensor([word_to_ix[target]])))
        # 5. Backpropagate and update the parameters
        loss.backward()
        optimizer.step()
        total_loss += loss.item()
    losses.append(total_loss)
print(losses)
```
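Once trained, the model can guess the next word by taking the index with the highest log-probability. The sketch below is self-contained, so it repeats a compact version of the model and the training loop on a toy six-word sentence. The class name `NGramLM`, the helper `predict_next`, the toy text and the switch to the Adam optimizer (chosen only so that this toy run converges quickly) are all assumptions, not the original setup.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

torch.manual_seed(0)

class NGramLM(nn.Module):
    """Embedding -> linear + ReLU -> linear -> log_softmax over the vocabulary."""
    def __init__(self, vocab_size, embed_dim, context_size):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        self.linear1 = nn.Linear(embed_dim * context_size, 128)
        self.linear2 = nn.Linear(128, vocab_size)

    def forward(self, idxs):
        embeds = self.embedding(idxs).view(1, -1)
        return F.log_softmax(self.linear2(F.relu(self.linear1(embeds))), dim=1)

tokens = "the cat sat on the mat".split()
vocab = sorted(set(tokens))
word_to_ix = {w: i for i, w in enumerate(vocab)}
ix_to_word = {i: w for w, i in word_to_ix.items()}
trigrams = [(tokens[i:i + 2], tokens[i + 2]) for i in range(len(tokens) - 2)]

model = NGramLM(len(vocab), 10, 2)
optimizer = optim.Adam(model.parameters(), lr=0.01)  # Adam swapped in for the toy run
loss_function = nn.NLLLoss()

losses = []
for epoch in range(200):
    total = 0.0
    for context, target in trigrams:
        idxs = torch.tensor([word_to_ix[w] for w in context])
        model.zero_grad()
        loss = loss_function(model(idxs), torch.tensor([word_to_ix[target]]))
        loss.backward()
        optimizer.step()
        total += loss.item()
    losses.append(total)

def predict_next(model, context):
    """Return the most probable next word for a list of context words."""
    with torch.no_grad():  # no backward pass is needed for prediction
        idxs = torch.tensor([word_to_ix[w] for w in context])
        return ix_to_word[model(idxs).argmax(dim=1).item()]

print(losses[0], losses[-1])
print(predict_next(model, ["the", "cat"]))
```

On a corpus this small the model simply memorizes the four trigrams, so the check is only that training and prediction are wired together correctly.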

That concludes this walkthrough of simple PyTorch applications in NLP. I hope it serves as a useful reference, and thank you for supporting Yisu Cloud (億速云).
