您好,登錄后才能下訂單哦!

您好,登錄后才能下訂單哦!

pytorch中如何只讓指定變量向后傳播梯度?

(或者說如何讓指定變量不參與后向傳播?)

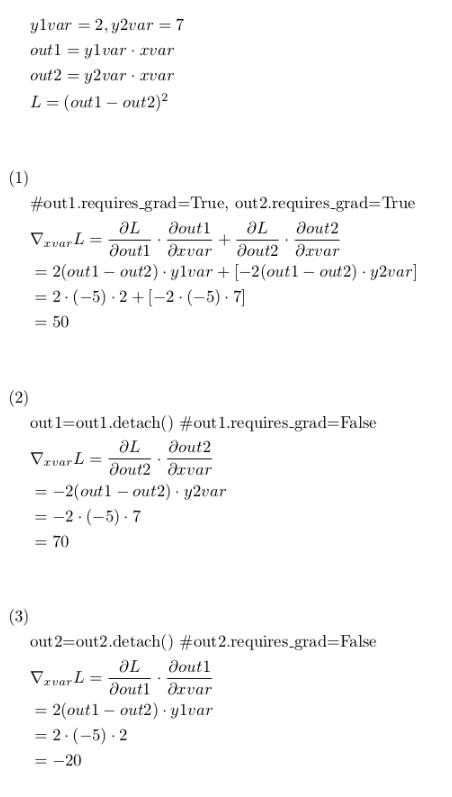

有以下公式,假如要讓L對xvar求導:

(1)中,L對xvar的求導將同時計算out1部分和out2部分;

(2)中,L對xvar的求導只計算out2部分,因為out1的requires_grad=False;

(3)中,L對xvar的求導只計算out1部分,因為out2的requires_grad=False;

驗證如下:

#!/usr/bin/env python2

# -*- coding: utf-8 -*-

"""

Created on Wed May 23 10:02:04 2018

@author: hy

"""

import torch

from torch.autograd import Variable

print("Pytorch version: {}".format(torch.__version__))

x=torch.Tensor([1])

xvar=Variable(x,requires_grad=True)

y1=torch.Tensor([2])

y2=torch.Tensor([7])

y1var=Variable(y1)

y2var=Variable(y2)

#(1)

print("For (1)")

print("xvar requres_grad: {}".format(xvar.requires_grad))

print("y1var requres_grad: {}".format(y1var.requires_grad))

print("y2var requres_grad: {}".format(y2var.requires_grad))

out1 = xvar*y1var

print("out1 requres_grad: {}".format(out1.requires_grad))

out2 = xvar*y2var

print("out2 requres_grad: {}".format(out2.requires_grad))

L=torch.pow(out1-out2,2)

L.backward()

print("xvar.grad: {}".format(xvar.grad))

xvar.grad.data.zero_()

#(2)

print("For (2)")

print("xvar requres_grad: {}".format(xvar.requires_grad))

print("y1var requres_grad: {}".format(y1var.requires_grad))

print("y2var requres_grad: {}".format(y2var.requires_grad))

out1 = xvar*y1var

print("out1 requres_grad: {}".format(out1.requires_grad))

out2 = xvar*y2var

print("out2 requres_grad: {}".format(out2.requires_grad))

out1 = out1.detach()

print("after out1.detach(), out1 requres_grad: {}".format(out1.requires_grad))

L=torch.pow(out1-out2,2)

L.backward()

print("xvar.grad: {}".format(xvar.grad))

xvar.grad.data.zero_()

#(3)

print("For (3)")

print("xvar requres_grad: {}".format(xvar.requires_grad))

print("y1var requres_grad: {}".format(y1var.requires_grad))

print("y2var requres_grad: {}".format(y2var.requires_grad))

out1 = xvar*y1var

print("out1 requres_grad: {}".format(out1.requires_grad))

out2 = xvar*y2var

print("out2 requres_grad: {}".format(out2.requires_grad))

#out1 = out1.detach()

out2 = out2.detach()

print("after out2.detach(), out2 requres_grad: {}".format(out1.requires_grad))

L=torch.pow(out1-out2,2)

L.backward()

print("xvar.grad: {}".format(xvar.grad))

xvar.grad.data.zero_()

pytorch中,將變量的requires_grad設為False,即可讓變量不參與梯度的后向傳播;

但是不能直接將out1.requires_grad=False;

其實,Variable類型提供了detach()方法,所返回變量的requires_grad為False。

注意:如果out1和out2的requires_grad都為False的話,那么xvar.grad就出錯了,因為梯度沒有傳到xvar

補充:

volatile=True表示這個變量不計算梯度, 參考:Volatile is recommended for purely inference mode, when you're sure you won't be even calling .backward(). It's more efficient than any other autograd setting - it will use the absolute minimal amount of memory to evaluate the model. volatile also determines that requires_grad is False.

以上這篇在pytorch中實現只讓指定變量向后傳播梯度就是小編分享給大家的全部內容了,希望能給大家一個參考,也希望大家多多支持億速云。

免責聲明:本站發布的內容(圖片、視頻和文字)以原創、轉載和分享為主,文章觀點不代表本網站立場,如果涉及侵權請聯系站長郵箱:is@yisu.com進行舉報,并提供相關證據,一經查實,將立刻刪除涉嫌侵權內容。